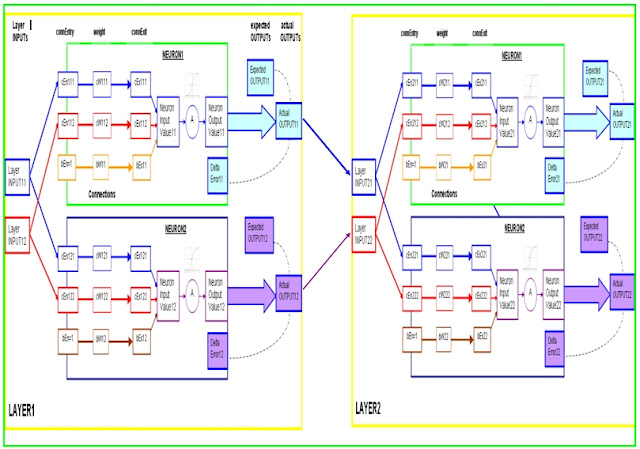

A worked example of a Back-propagation training cycle.

In this example we will create a 2-layer network (as seen above) that accepts 2 readings and produces 2 outputs. The readings are (0,1) and the expectedOutputs in this example are (1,0).

Step 1: Create the network

NeuralNetwork NN = new NeuralNetwork();

NN.addLayer(2,2);

NN.addLayer(2,2);

float[] readings = {0,1};

float[] expectedOutputs = {1,0};

NN.trainNetwork(readings,expectedOutputs);

This neural network will have randomised weights and biases when created.

Let us assume that the network generates the following random values:

LAYER1.Neuron1

Layer1.Neuron1.Connection1.weight = cW111 = 0.3

Layer1.Neuron1.Connection2.weight = cW112 = 0.8

Layer1.Neuron1.Bias = bW11 = 0.4

LAYER1.Neuron2

Layer1.Neuron2.Connection1.weight = cW121 = 0.1

Layer1.Neuron2.Connection2.weight = cW122 = 0.1

Layer1.Neuron2.Bias = bW12 = 0.2

LAYER2.Neuron1

Layer2.Neuron1.Connection1.weight = cW211 = 0.6

Layer2.Neuron1.Connection2.weight = cW212 = 0.4

Layer2.Neuron1.Bias = bW21 = 0.4

LAYER2.Neuron2

Layer2.Neuron2.Connection1.weight = cW221 = 0.1

Layer2.Neuron2.Connection2.weight = cW222 = 0.1

Layer2.Neuron2.Bias = bW22 = 0.5

Step 2: Process the Readings through the Neural Network

a) Provide the Readings to the first layer, and calculate the neuron outputs

The readings provided to the neural network are (0,1), and they go straight through to the first layer (Layer 1).

Starting with Layer 1:

Layer1.INPUT1 = 0

Layer1.INPUT2 = 1

Calculate Layer1.Neuron1.NeuronOutput

ConnExit (cEx111) = ConnEntry (cEn111) x Weight (cW111) = 0 x 0.3 = 0;

ConnExit (cEx112) = ConnEntry (cEn112) x Weight (cW112) = 1 x 0.8 = 0.8;

Bias (bEx11) = ConnEntry (1) x Weight (bW11) = 1 x 0.4 = 0.4

NeuronInputValue11 = 0 + 0.8 + 0.4 = 1.2

NeuronOutputValue11 = 1/(1+EXP(-1 x 1.2)) = 0.768525

Calculate Layer1.Neuron2.NeuronOutput

ConnExit (cEx121) = ConnEntry (cEn121) x Weight (cW121) = 0 x 0.1 = 0;

ConnExit (cEx122) = ConnEntry (cEn122) x Weight (cW122) = 1 x 0.1 = 0.1;

Bias (bEx12) = ConnEntry (1) x Weight (bW12) = 1 x 0.2 = 0.2

NeuronInputValue12 = 0 + 0.1 + 0.2 = 0.3

NeuronOutputValue12 = 1/(1+EXP(-1 x 0.3)) = 0.574443
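The Layer 1 arithmetic above can be checked with a few lines of plain Java. This is only a sketch: it does not use the tutorial's NeuralNetwork class, and the names Layer1Check, sigmoid and neuronOutput are made up for illustration. The weights and biases are the ones used in the calculations above.

```java
// Hypothetical helper class, not part of the tutorial's NeuralNetwork code.
class Layer1Check {
    // Sigmoid activation: 1 / (1 + e^-x).
    static double sigmoid(double x) {
        return 1.0 / (1.0 + Math.exp(-x));
    }

    // Sum of (connEntry x weight) over all connections, plus the bias,
    // passed through the sigmoid.
    static double neuronOutput(double[] inputs, double[] weights, double bias) {
        double sum = bias; // the bias connection always has an entry value of 1
        for (int i = 0; i < inputs.length; i++) {
            sum += inputs[i] * weights[i];
        }
        return sigmoid(sum);
    }

    public static void main(String[] args) {
        double[] readings = {0, 1};
        // Weights and biases as used in the arithmetic above.
        double out11 = neuronOutput(readings, new double[]{0.3, 0.8}, 0.4);
        double out12 = neuronOutput(readings, new double[]{0.1, 0.1}, 0.2);
        System.out.printf("NeuronOutputValue11 = %.6f%n", out11);
        System.out.printf("NeuronOutputValue12 = %.6f%n", out12);
    }
}
```

The two printed values should agree with NeuronOutputValue11 and NeuronOutputValue12 above to six decimal places.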

b) Provide LAYER2 with Layer 1 Outputs.

Now let's move to Layer 2:

Layer2.INPUT1 = NeuronOutputValue11 = 0.768525

Layer2.INPUT2 = NeuronOutputValue12 = 0.574443

Calculate Layer2.Neuron1.NeuronOutput

ConnExit (cEx211) = ConnEntry (cEn211) x Weight (cW211) = 0.768525 x 0.6 = 0.461115;

ConnExit (cEx212) = ConnEntry (cEn212) x Weight (cW212) = 0.574443 x 0.4 = 0.229777;

Bias (bEx21) = ConnEntry (1) x Weight (bW21) = 1 x 0.4 = 0.4

NeuronInputValue21 = 0.461115 + 0.229777 + 0.4 = 1.090892

NeuronOutputValue21 = 1/(1+EXP(-1 x 1.090892)) = 0.74855

Calculate Layer2.Neuron2.NeuronOutput

ConnExit (cEx221) = ConnEntry (cEn221) x Weight (cW221) = 0.768525 x 0.1 = 0.076853;

ConnExit (cEx222) = ConnEntry (cEn222) x Weight (cW222) = 0.574443 x 0.1 = 0.057444;

Bias (bEx22) = ConnEntry (1) x Weight (bW22) = 1 x 0.5 = 0.5

NeuronInputValue22 = 0.076853 + 0.057444 + 0.5 = 0.634297

NeuronOutputValue22 = 1/(1+EXP(-1 x 0.634297)) = 0.653463
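Step 2 as a whole is just the same neuron calculation repeated layer by layer, with the outputs of one layer becoming the inputs of the next. Here is a rough sketch of that idea in plain Java. The class name ForwardPassSketch and the array layout (weights[neuron][connection]) are my own assumptions for illustration, not the layout used inside the tutorial's NeuralNetwork class.

```java
// Hypothetical sketch of the Step 2 forward pass; names and layout are assumptions.
class ForwardPassSketch {
    static double sigmoid(double x) {
        return 1.0 / (1.0 + Math.exp(-x));
    }

    // Outputs of one layer. weights[n] holds the connection weights of neuron n,
    // biases[n] holds its bias weight.
    static double[] layerOutputs(double[] inputs, double[][] weights, double[] biases) {
        double[] outputs = new double[biases.length];
        for (int n = 0; n < outputs.length; n++) {
            double sum = biases[n]; // bias entry is always 1
            for (int c = 0; c < inputs.length; c++) {
                sum += inputs[c] * weights[n][c];
            }
            outputs[n] = sigmoid(sum);
        }
        return outputs;
    }

    public static void main(String[] args) {
        // Values as used in the Step 2 arithmetic.
        double[][] layer1Weights = {{0.3, 0.8}, {0.1, 0.1}};
        double[] layer1Biases = {0.4, 0.2};
        double[][] layer2Weights = {{0.6, 0.4}, {0.1, 0.1}};
        double[] layer2Biases = {0.4, 0.5};

        double[] readings = {0, 1};
        double[] layer1Out = layerOutputs(readings, layer1Weights, layer1Biases);
        double[] layer2Out = layerOutputs(layer1Out, layer2Weights, layer2Biases);
        System.out.println(java.util.Arrays.toString(layer2Out));
    }
}
```

Feeding Layer 1's output array straight into the next call is all that "provide LAYER2 with Layer 1 outputs" amounts to in code.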

Step 3: Calculate the delta error for neurons in layer 2

Because layer 2 is the last layer in this neural network, we will use the expected output data (1,0) to calculate the delta error.

LAYER2.Neuron1:

Let Layer2.ExpectedOutput1 = eO21 = 1

Layer2.ActualOutput1= aO21 = NeuronOutputValue21= 0.74855

Layer2.Neuron1.deltaError = dE21

dE21 = aO21 x (1 - aO21) x (eO21 - aO21)

= (0.74855) x (1 - 0.74855) x (1 - 0.74855)

= (0.74855) x (0.25145) x (0.25145)

= 0.047329

LAYER2.Neuron2:

Let Layer2.ExpectedOutput2 = eO22 = 0

Layer2.ActualOutput2 = aO22 = NeuronOutputValue22 = 0.653463

Layer2.Neuron2.deltaError = dE22

dE22 = aO22 x (1 - aO22) x (eO22 - aO22)

= (0.653463) x (1 - 0.653463) x (0 - 0.653463)

= (0.653463) x (0.346537) x (-0.653463)

= -0.14797
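The output-layer delta error formula used above is short enough to write as a single function. A minimal sketch in plain Java follows; OutputDeltaSketch and outputDelta are hypothetical names chosen for this example.

```java
// Hypothetical sketch of the output-layer delta error from Step 3.
class OutputDeltaSketch {
    // deltaError = actual x (1 - actual) x (expected - actual)
    // The actual x (1 - actual) part is the slope of the sigmoid at that output.
    static double outputDelta(double actual, double expected) {
        return actual * (1.0 - actual) * (expected - actual);
    }

    public static void main(String[] args) {
        double dE21 = outputDelta(0.74855, 1);  // Layer 2, Neuron 1
        double dE22 = outputDelta(0.653463, 0); // Layer 2, Neuron 2
        System.out.printf("dE21 = %.6f%n", dE21);
        System.out.printf("dE22 = %.6f%n", dE22);
    }
}
```

The second value comes out at about -0.147976, which the text above shortens to -0.14797.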

Step 4: Calculate the delta error for neurons in layer 1

LAYER1.Neuron1 delta Error calculation

Let Layer1.Neuron1.deltaError = dE11

Layer1.actualOutput1 = aO11 = NeuronOutputValue11 = 0.768525

Layer2.Neuron1.Connection1.weight = cW211 = 0.6

Layer2.Neuron1.deltaError = dE21 = 0.047329

Layer2.Neuron2.Connection1.weight = cW221 = 0.1

Layer2.Neuron2.deltaError = dE22 = -0.14797

dE11 = (aO11) x (1 - aO11) x ( [cW211 x dE21] + [cW221 x dE22] )

= (0.768525) x (1 - 0.768525) x ([0.6 x 0.047329] + [0.1 x -0.14797])

= (0.768525) x (0.231475) x (0.0136)

= 0.002419

LAYER1.Neuron2 delta Error calculation

Let Layer1.Neuron2.deltaError = dE12

Layer1.actualOutput2 = aO12 = NeuronOutputValue12 = 0.574443

Layer2.Neuron1.Connection2.weight = cW212 = 0.4

Layer2.Neuron1.deltaError = dE21 = 0.047329

Layer2.Neuron2.Connection2.weight = cW222 = 0.1

Layer2.Neuron2.deltaError = dE22 = -0.14797

dE12 = (aO12) x (1 - aO12) x ( [cW212 x dE21] + [cW222 x dE22] )

= (0.574443) x (1 - 0.574443) x ([0.4 x 0.047329] + [0.1 x -0.14797])

= (0.574443) x (0.425557) x (0.004135)

= 0.001011

Step 5: Update the Layer 2 neuron connection weights and biases (with a learning rate, LR, of 0.1)

Layer 2, Neuron 1 calculations:

Let

Layer2.Neuron1.Connection1.New_weight = New_cW211

Layer2.Neuron1.Connection1.Old_weight = Old_cW211 = 0.6

Layer2.Neuron1.Connection1.connEntry = cEn211 = 0.768525

Layer2.Neuron1.deltaError = dE21 = 0.047329

New_cW211 = Old_cW211 + (LR x cEn211 x dE21)

= 0.6 + (0.1 x 0.768525 x 0.047329)

= 0.6 + (0.003637)

= 0.603637

Layer2.Neuron1.Connection2.New_weight = New_cW212

Layer2.Neuron1.Connection2.Old_weight = Old_cW212 = 0.4

Layer2.Neuron1.Connection2.connEntry = cEn212 = 0.574443

Layer2.Neuron1.deltaError = dE21 = 0.047329

New_cW212 = Old_cW212 + (LR x cEn212 x dE21)

= 0.4 + (0.1 x 0.574443 x 0.047329)

= 0.4 + (0.002719)

= 0.402719

Layer2.Neuron1.New_Bias = New_Bias21

Layer2.Neuron1.Old_Bias = Old_Bias21 = 0.4

Layer2.Neuron1.deltaError = dE21 = 0.047329

New_Bias21 = Old_Bias21 + (LR x 1 x dE21)

= 0.4 + (0.1 x 1 x 0.047329)

= 0.4 + (0.0047329)

= 0.4047329

--------------------------------------------------------------------

Layer 2, Neuron 2 calculations:

Layer2.Neuron2.Connection1.New_weight = New_cW221

Layer2.Neuron2.Connection1.Old_weight = Old_cW221 = 0.1

Layer2.Neuron2.Connection1.connEntry = cEn221 = 0.768525

Layer2.Neuron2.deltaError = dE22 = -0.14797

New_cW221 = Old_cW221 + (LR x cEn221 x dE22)

= 0.1 + (0.1 x 0.768525 x -0.14797)

= 0.1 + (-0.011372)

= 0.088628

Layer2.Neuron2.Connection2.New_weight = New_cW222

Layer2.Neuron2.Connection2.Old_weight = Old_cW222 = 0.1

Layer2.Neuron2.Connection2.connEntry = cEn222 = 0.574443

Layer2.Neuron2.deltaError = dE22 = -0.14797

New_cW222 = Old_cW222 + (LR x cEn222 x dE22)

= 0.1 + (0.1 x 0.574443 x -0.14797)

= 0.1 + (-0.0085)

= 0.0915

Layer2.Neuron2.New_Bias = New_Bias22

Layer2.Neuron2.Old_Bias = Old_Bias22 = 0.5

Layer2.Neuron2.deltaError = dE22 = -0.14797

New_Bias22 = Old_Bias22 + (LR x 1 x dE22)

= 0.5 + (0.1 x 1 x -0.14797)

= 0.5 + (-0.014797)

= 0.485203

--------------------------------------------------------------------------

Step 6: Update the Layer 1 neuron connection weights and biases.

Layer 1, Neuron 1 calculations:

Let

Layer1.Neuron1.Connection1.New_weight = New_cW111

Layer1.Neuron1.Connection1.Old_weight = Old_cW111 = 0.3

Layer1.Neuron1.Connection1.connEntry = cEn111 = 0

Layer1.Neuron1.deltaError = dE11 = 0.002419

New_cW111 = Old_cW111 + (LR x cEn111 x dE11)

= 0.3 + (0.1 x 0 x 0.002419)

= 0.3 + (0)

= 0.3

Layer1.Neuron1.Connection2.New_weight = New_cW112

Layer1.Neuron1.Connection2.Old_weight = Old_cW112 = 0.8

Layer1.Neuron1.Connection2.connEntry = cEn112 = 1

Layer1.Neuron1.deltaError = dE11 = 0.002419

New_cW112 = Old_cW112 + (LR x cEn112 x dE11)

= 0.8 + (0.1 x 1 x 0.002419)

= 0.8 + (0.0002419)

= 0.8002419

Layer1.Neuron1.New_Bias = New_Bias11

Layer1.Neuron1.Old_Bias = Old_Bias11 = 0.4

Layer1.Neuron1.deltaError = dE11 = 0.002419

New_Bias11 = Old_Bias11 + (LR x 1 x dE11)

= 0.4 + (0.1 x 1 x 0.002419)

= 0.4 + (0.0002419)

= 0.4002419

--------------------------------------------------------------------

Layer 1, Neuron 2 calculations:

Layer1.Neuron2.Connection1.New_weight = New_cW121

Layer1.Neuron2.Connection1.Old_weight = Old_cW121 = 0.1

Layer1.Neuron2.Connection1.connEntry = cEn121 = 0

Layer1.Neuron2.deltaError = dE12 = 0.001011

New_cW121 = Old_cW121 + (LR x cEn121 x dE12)

= 0.1 + (0.1 x 0 x 0.001011)

= 0.1 + (0)

= 0.1

Layer1.Neuron2.Connection2.New_weight = New_cW122

Layer1.Neuron2.Connection2.Old_weight = Old_cW122 = 0.1

Layer1.Neuron2.Connection2.connEntry = cEn122 = 1

Layer1.Neuron2.deltaError = dE12 = 0.001011

New_cW122 = Old_cW122 + (LR x cEn122 x dE12)

= 0.1 + (0.1 x 1 x 0.001011)

= 0.1 + (0.0001011)

= 0.1001011

Layer1.Neuron2.New_Bias = New_Bias12

Layer1.Neuron2.Old_Bias = Old_Bias12 = 0.2

Layer1.Neuron2.deltaError = dE12 = 0.001011

New_Bias12 = Old_Bias12 + (LR x 1 x dE12)

= 0.2 + (0.1 x 1 x 0.001011)

= 0.2 + (0.0001011)

= 0.2001011

----------------------------------------------------------------------
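Steps 4 to 6 boil down to two small formulas: the delta error of a hidden neuron, and the weight update rule. Here is a hedged sketch of both in plain Java. BackwardPassSketch, hiddenDelta and updatedWeight are names I have invented for this example, and the Layer 2, Neuron 2 connection weights are set to the 0.1 used in the Step 2 arithmetic.

```java
// Hypothetical sketch of the Step 4 to Step 6 formulas; names are assumptions.
class BackwardPassSketch {
    // Delta error of a hidden neuron: its sigmoid slope times the sum of
    // (next-layer weight x next-layer delta error) over the connections
    // that carry this neuron's output forward.
    static double hiddenDelta(double actual, double[] nextWeights, double[] nextDeltas) {
        double sum = 0;
        for (int k = 0; k < nextDeltas.length; k++) {
            sum += nextWeights[k] * nextDeltas[k];
        }
        return actual * (1.0 - actual) * sum;
    }

    // New weight = old weight + (LR x connEntry x deltaError).
    // For a bias, connEntry is always 1.
    static double updatedWeight(double oldWeight, double lr, double connEntry, double delta) {
        return oldWeight + lr * connEntry * delta;
    }

    public static void main(String[] args) {
        double lr = 0.1;
        double dE21 = 0.047329;
        double dE22 = -0.14797;

        // cW211 and cW221 carry Layer 1, Neuron 1's output into Layer 2.
        // cW221 and cW222 are taken as 0.1, the value used in the Step 2 arithmetic.
        double dE11 = hiddenDelta(0.768525, new double[]{0.6, 0.1}, new double[]{dE21, dE22});
        double dE12 = hiddenDelta(0.574443, new double[]{0.4, 0.1}, new double[]{dE21, dE22});

        System.out.printf("dE11 = %.6f, dE12 = %.6f%n", dE11, dE12);
        System.out.printf("New_cW211 = %.6f%n", updatedWeight(0.6, lr, 0.768525, dE21));
        System.out.printf("New_cW112 = %.7f%n", updatedWeight(0.8, lr, 1, dE11));
        System.out.printf("New_Bias21 = %.7f%n", updatedWeight(0.4, lr, 1, dE21));
    }
}
```

Note that every delta error is calculated from the old weights before any weight is changed, which is why the updates can be done in any order afterwards.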

All done. That was just one training cycle. Thank goodness we have computers!

A computer can process these calculations really quickly, but depending on how complicated your neural network is (i.e. the number of layers and the number of neurons per layer), the training procedure may still take some time. Believe me, if you have designed it right, it is well worth the wait.

Once you have the desired weights and bias values set up, you are good to go: as you receive data, the computer can do a single forward pass in a fraction of a second, and you will get your desired output, hopefully :)
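To give a feel for what repeating the training cycle looks like, here is a self-contained sketch in plain Java that runs the same cycle many times on the single example from this walkthrough. It is not the tutorial's NeuralNetwork class: TrainingLoopSketch, its array layout (weights[layer][neuron][connection]) and the choice of 10000 cycles are all assumptions made for illustration, and the starting values are the ones used in the forward-pass arithmetic.

```java
// Hypothetical sketch: repeats the worked training cycle on one example.
class TrainingLoopSketch {
    // weights[layer][neuron][connection], biases[layer][neuron]
    double[][][] weights = {
        {{0.3, 0.8}, {0.1, 0.1}},
        {{0.6, 0.4}, {0.1, 0.1}}
    };
    double[][] biases = {{0.4, 0.2}, {0.4, 0.5}};

    static double sigmoid(double x) {
        return 1.0 / (1.0 + Math.exp(-x));
    }

    // Forward pass: returns the outputs of every layer (index 0 = Layer 1).
    double[][] forward(double[] readings) {
        double[][] out = new double[weights.length][];
        double[] in = readings;
        for (int l = 0; l < weights.length; l++) {
            out[l] = new double[weights[l].length];
            for (int n = 0; n < weights[l].length; n++) {
                double sum = biases[l][n];
                for (int c = 0; c < in.length; c++) sum += in[c] * weights[l][n][c];
                out[l][n] = sigmoid(sum);
            }
            in = out[l];
        }
        return out;
    }

    // One training cycle: forward pass, delta errors (last layer first),
    // then weight and bias updates using the old weights' delta errors.
    void trainCycle(double[] readings, double[] expected, double lr) {
        double[][] out = forward(readings);
        int last = weights.length - 1;
        double[][] delta = new double[weights.length][];
        for (int l = last; l >= 0; l--) {
            delta[l] = new double[weights[l].length];
            for (int n = 0; n < weights[l].length; n++) {
                double err = 0;
                if (l == last) {
                    err = expected[n] - out[l][n];
                } else {
                    for (int k = 0; k < weights[l + 1].length; k++)
                        err += weights[l + 1][k][n] * delta[l + 1][k];
                }
                delta[l][n] = out[l][n] * (1 - out[l][n]) * err;
            }
        }
        for (int l = 0; l <= last; l++) {
            double[] in = (l == 0) ? readings : out[l - 1];
            for (int n = 0; n < weights[l].length; n++) {
                for (int c = 0; c < in.length; c++)
                    weights[l][n][c] += lr * in[c] * delta[l][n];
                biases[l][n] += lr * delta[l][n];
            }
        }
    }

    public static void main(String[] args) {
        TrainingLoopSketch net = new TrainingLoopSketch();
        double[] readings = {0, 1};
        double[] expected = {1, 0};
        for (int cycle = 0; cycle < 10000; cycle++) {
            net.trainCycle(readings, expected, 0.1);
        }
        // A single forward pass with the trained weights.
        double[] result = net.forward(readings)[1];
        System.out.println(java.util.Arrays.toString(result));
    }
}
```

After enough cycles the two outputs should drift towards the expected (1,0). Training on a single example like this only shows the mechanics; a real project would cycle through many different readings.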

Here is a complete Processing.org script that demonstrates the use of my neural network.

Neural Network (Part 7): Cut and Paste Code.

If you liked my tutorial, please let me know in the comments. It is sometimes hard to know if anyone is actually reading this stuff. If you use my code in your own project, I am also happy for you to leave a link to a YouTube video etc. in the comments.