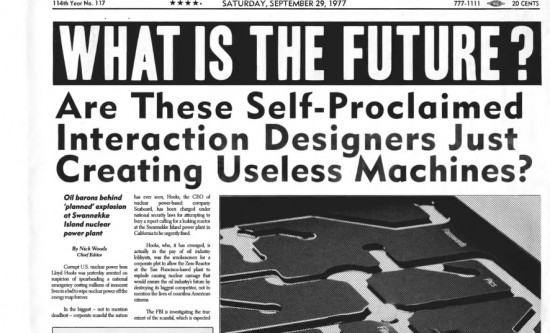

A kinetic installation becomes a hyper-sensorial landscape

Interactive kinetic installations are always impressive to see in action, and even more so when they’re part of a performance. One example is Infinite Delta, the result of Boris Chimp 504 + Alma D’ Arame’s artistic residency at Montemor-o-Novo in Portugal.

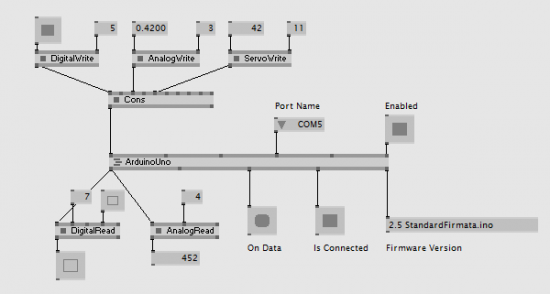

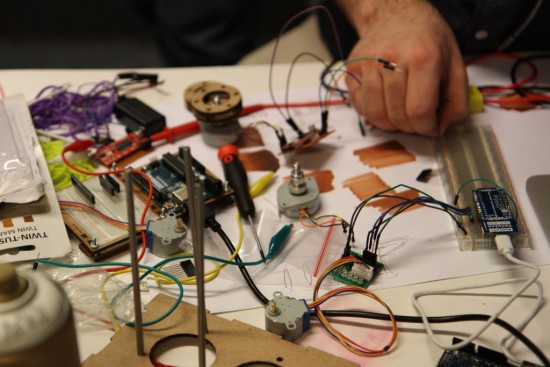

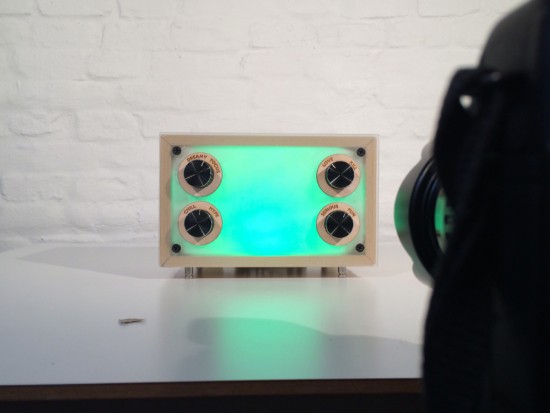

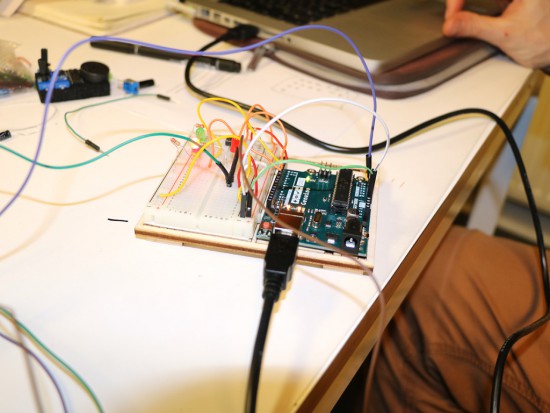

Using Arduino boards, they built a physical structure made up of triangular planes that swing back and forth like pendulums, driven by a series of servo motors. Light is projected onto the moving planes, creating patterns that are then reflected onto a nearby wall. Infinite Delta also changes its shape in response to the movement and sound of the audience.
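
The project's actual control code isn't described here, so the following is only a rough sketch of how a pendulum-like swing that reacts to the audience could be computed. It assumes a normalized audience level between 0 and 1 (for example, derived from a microphone or motion sensor) that scales the swing amplitude of each servo. Every name and value in it (`swing_angle`, `panel_angles`, the 45° maximum amplitude, the 2-second period, the phase offset between panels) is hypothetical and chosen purely for illustration.

```python
import math

# Typical hobby-servo range in degrees (an assumption, not a detail from the project).
SERVO_MIN_DEG = 0.0
SERVO_MAX_DEG = 180.0


def swing_angle(t_seconds, audience_level, center_deg=90.0,
                max_amplitude_deg=45.0, period_s=2.0):
    """Return a servo angle for a pendulum-like swing at time t_seconds.

    audience_level is a hypothetical 0..1 measure of sound or movement;
    a louder or busier audience produces a wider swing.
    """
    # Clamp the sensor reading so a noisy value cannot overdrive the servo.
    level = min(max(audience_level, 0.0), 1.0)
    amplitude = max_amplitude_deg * level
    # Sinusoidal motion around the center approximates a pendulum swing.
    angle = center_deg + amplitude * math.sin(2.0 * math.pi * t_seconds / period_s)
    # Keep the result inside the servo's mechanical range.
    return min(max(angle, SERVO_MIN_DEG), SERVO_MAX_DEG)


def panel_angles(t_seconds, audience_level, panel_count=4, phase_step_s=0.25):
    """Return one angle per triangular panel, each slightly out of phase."""
    return [
        swing_angle(t_seconds + i * phase_step_s, audience_level)
        for i in range(panel_count)
    ]


if __name__ == "__main__":
    # Print a short trace for a moderately active audience.
    for step in range(5):
        t = step * 0.25
        print(t, [round(a, 1) for a in panel_angles(t, audience_level=0.6)])
```

On real hardware, each computed angle would be sent to its servo on every loop iteration. Offsetting the panels in phase is just one way to get a rippling effect across the structure.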

In Euclidean geometry, any three non-collinear points form a unique triangle and determine a unique plane. In quantum physics, meanwhile, string theory proposes that fundamental particles may resemble strings. It also states that the universe is infinite and that all matter is contained in it. In this “multiverse”, our universe would be just one of many parallel universes. What would happen, then, if we multiplied triangles infinitely? Could we, or would we, have access to those parallel universes?

The performers augmented the physical world by overlaying it with the digital world to produce a new, alternative, magical and hyper-sensorial landscape.