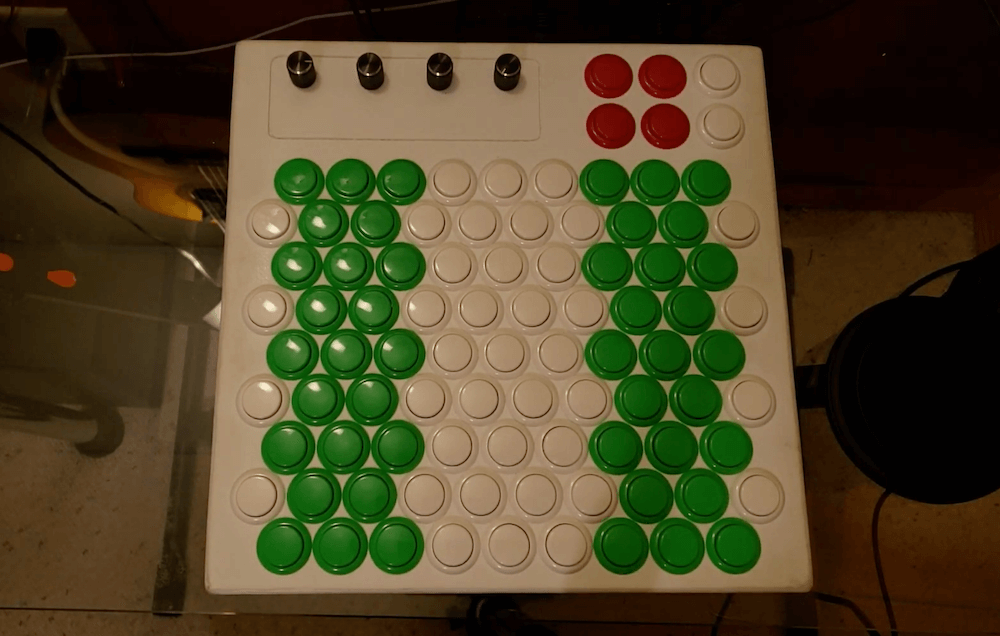

This acoustic sensing system localizes touch and senses force on everyday surfaces

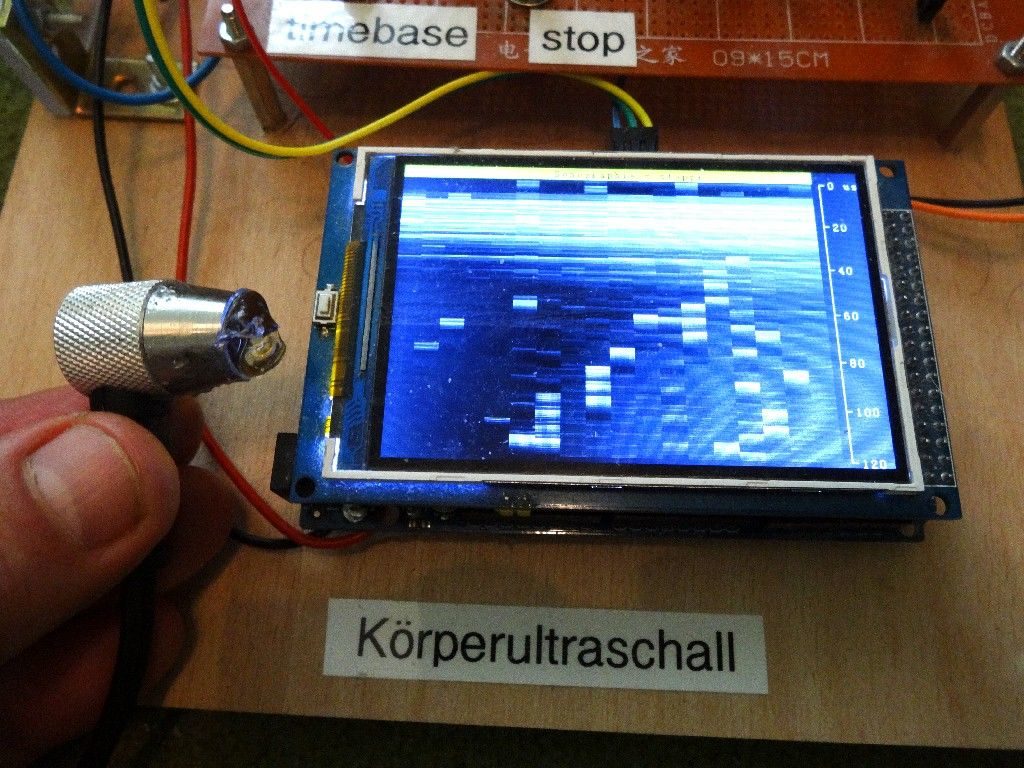

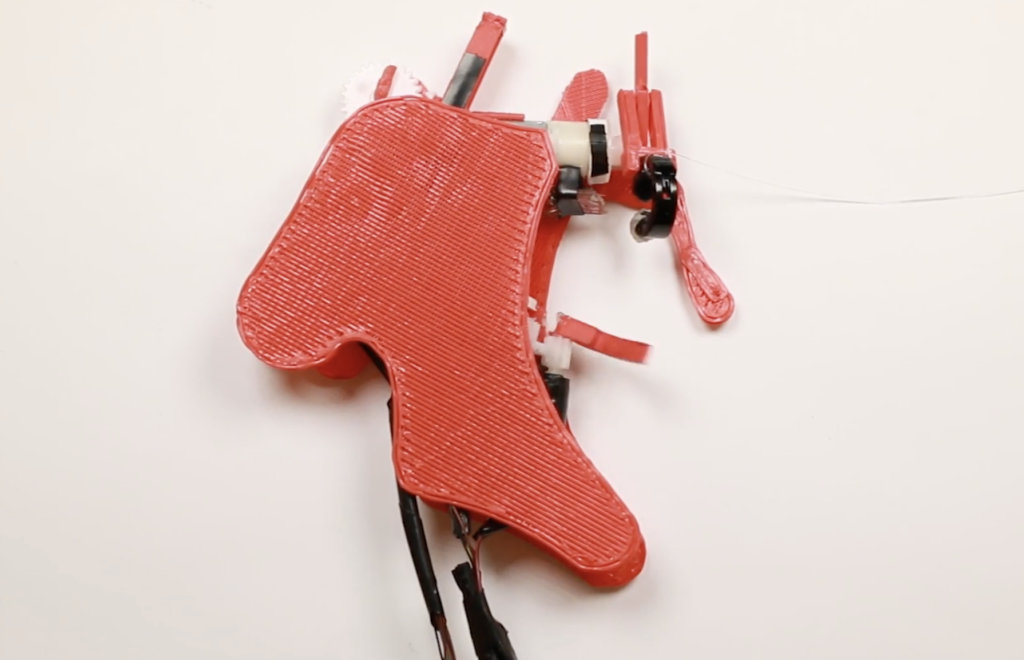

Researchers from the University of Auckland in New Zealand are exploring a new way to construct interactive touch surfaces using finger-mounted audio transducers.

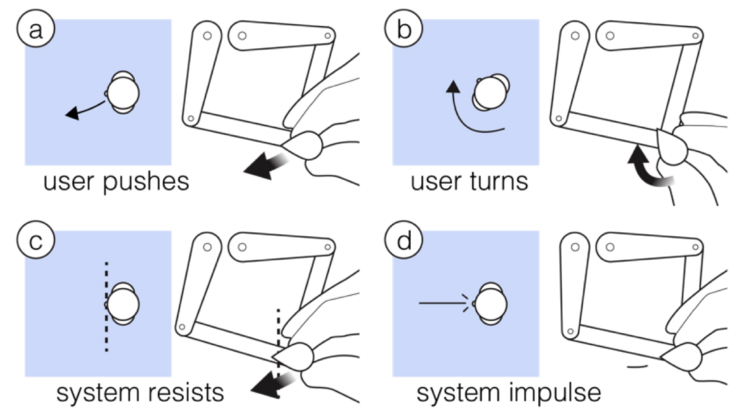

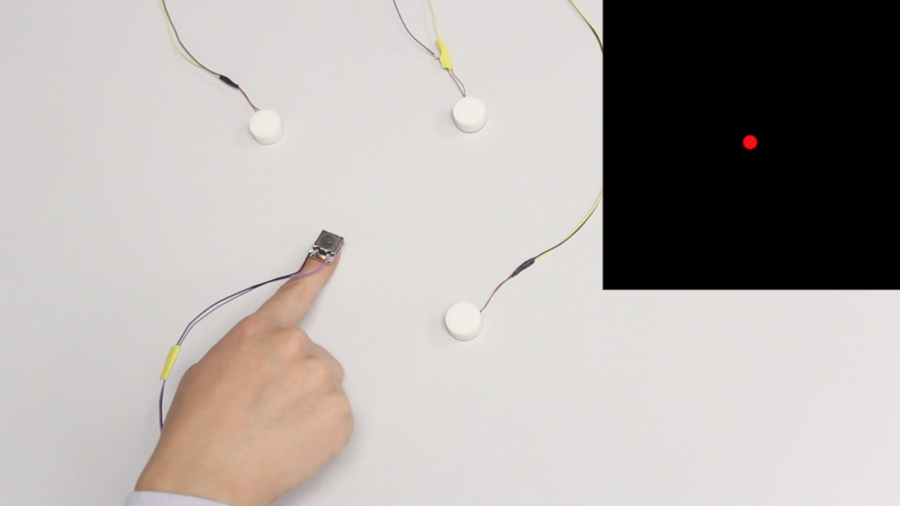

VersaTouch, which works on everyday surfaces, uses one or more receivers to measure the sound waves emanating from the wearer’s “augmented” fingers, allowing it to calculate their positions and movements. The plug-and-play system can also sense touch force from changes in the received audio signature, and it tracks individual digits by alternating their sonic outputs so that each finger emits in turn.
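The article does not spell out how the fingers take turns. One plausible reading is a round-robin time-division scheme in which each finger emits during its own fixed-length slot. The Java sketch below (Java to match the project's processing code) shows that idea under loudly stated assumptions. The class `FingerScheduler`, its slot length, a clock shared by transmitter and receiver, and the rule that each finger emits at the start of its slot are all hypothetical, not details from the VersaTouch paper.

```java
/**
 * Hypothetical sketch of round-robin (time-division) finger identification.
 * Assumptions, not taken from the VersaTouch paper: every finger emits a burst at
 * the start of its own fixed-length slot, the slots repeat in a fixed cycle, the
 * transmitter and receiver share a clock, and propagation delay is far shorter
 * than one slot.
 */
final class FingerScheduler {
    private final int fingerCount; // number of augmented fingers in the cycle
    private final long slotMicros; // length of one emission slot in microseconds (assumed)

    FingerScheduler(int fingerCount, long slotMicros) {
        if (fingerCount <= 0 || slotMicros <= 0) {
            throw new IllegalArgumentException("fingerCount and slotMicros must be positive");
        }
        this.fingerCount = fingerCount;
        this.slotMicros = slotMicros;
    }

    /** Index of the finger whose slot contains the given time (microseconds since start). */
    int activeFinger(long tMicros) {
        requireNonNegative(tMicros);
        return (int) ((tMicros / slotMicros) % fingerCount);
    }

    /** Start of the slot containing the given time, i.e. the assumed emission instant. */
    long slotStart(long tMicros) {
        requireNonNegative(tMicros);
        return (tMicros / slotMicros) * slotMicros;
    }

    /** Time of flight of a burst detected at arrivalMicros, measured from its slot start. */
    long timeOfFlight(long arrivalMicros) {
        return arrivalMicros - slotStart(arrivalMicros);
    }

    private static void requireNonNegative(long t) {
        if (t < 0) {
            throw new IllegalArgumentException("time must be non-negative");
        }
    }

    public static void main(String[] args) {
        FingerScheduler scheduler = new FingerScheduler(3, 10_000); // 3 fingers, 10 ms slots
        long arrival = 20_150; // burst detected 20.15 ms after the cycle started
        System.out.println("finger " + scheduler.activeFinger(arrival)
                + ", time of flight " + scheduler.timeOfFlight(arrival) + " us");
    }
}
```

Running `main` prints `finger 2, time of flight 150 us`. Fixed slots keep the receiver logic simple. The trade-off is that each finger is sampled only once per cycle, so adding fingers lowers each finger's update rate.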

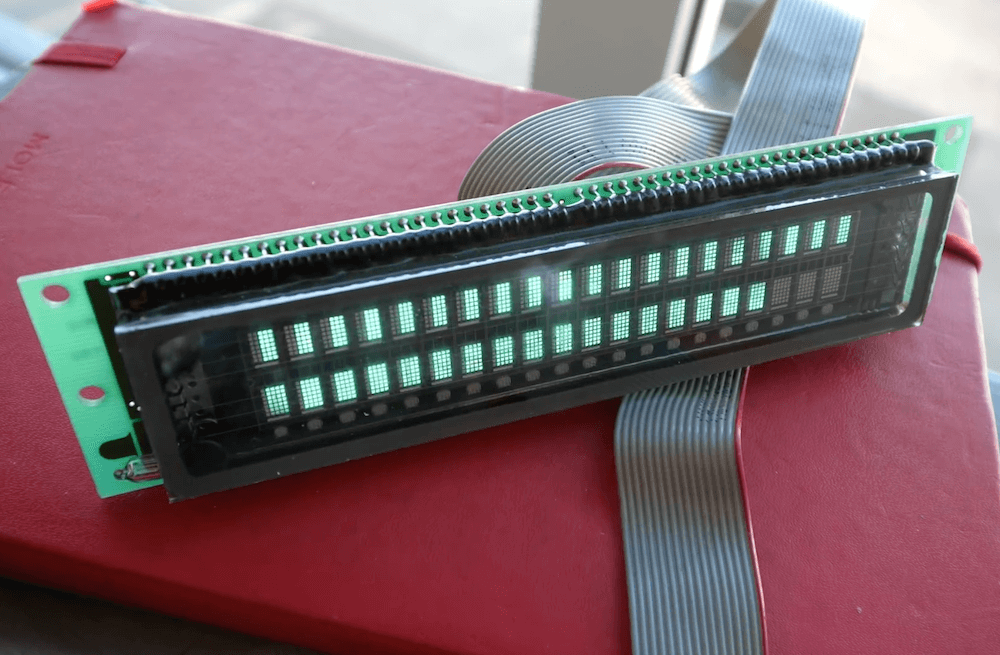

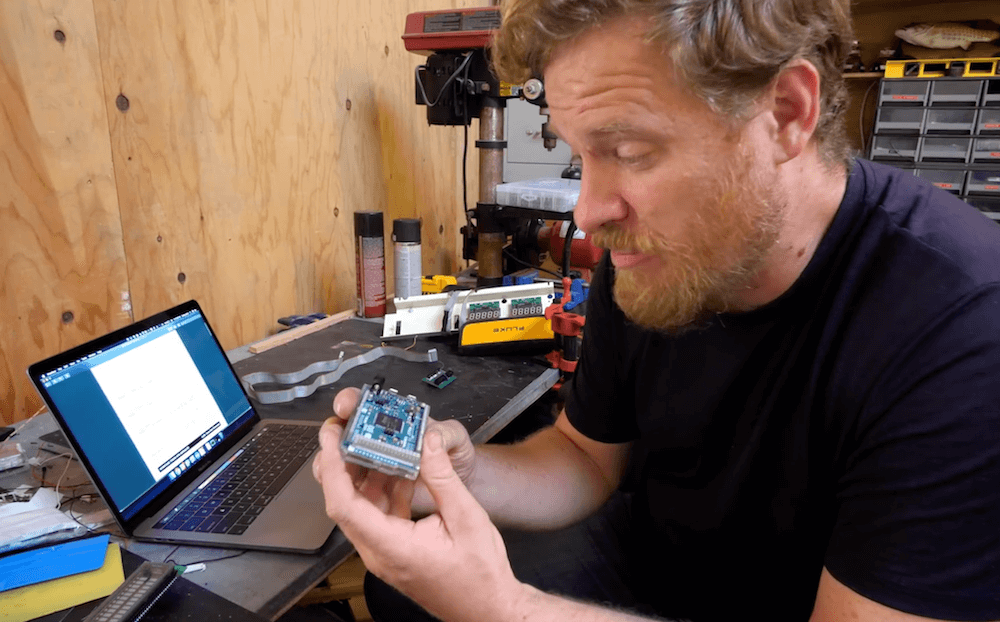

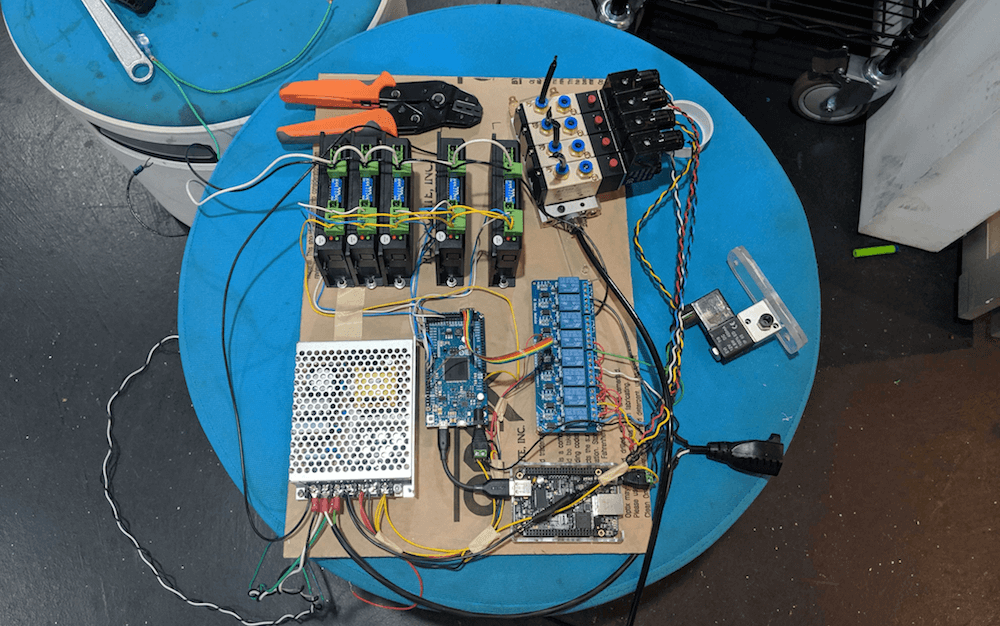

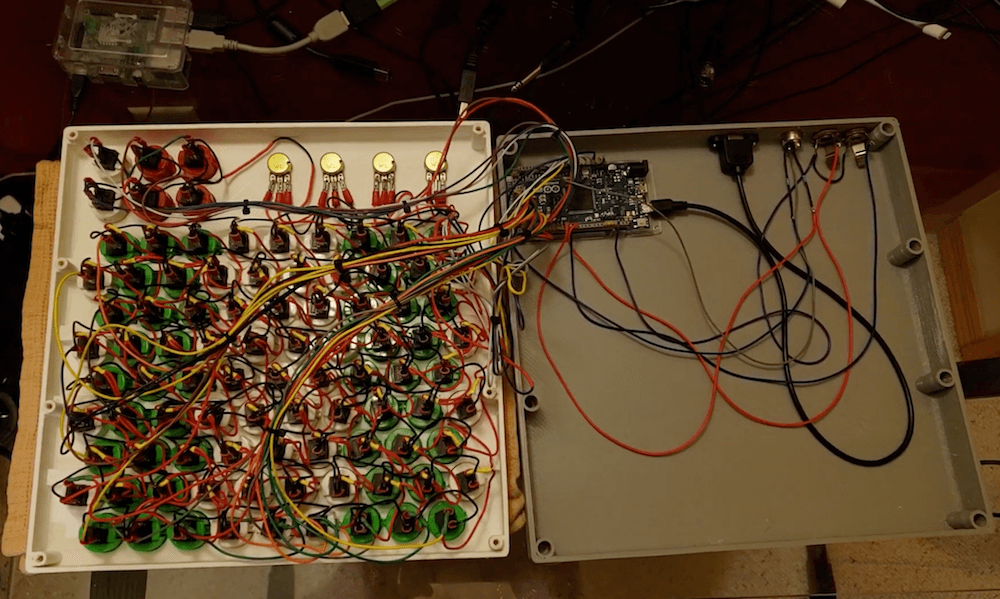

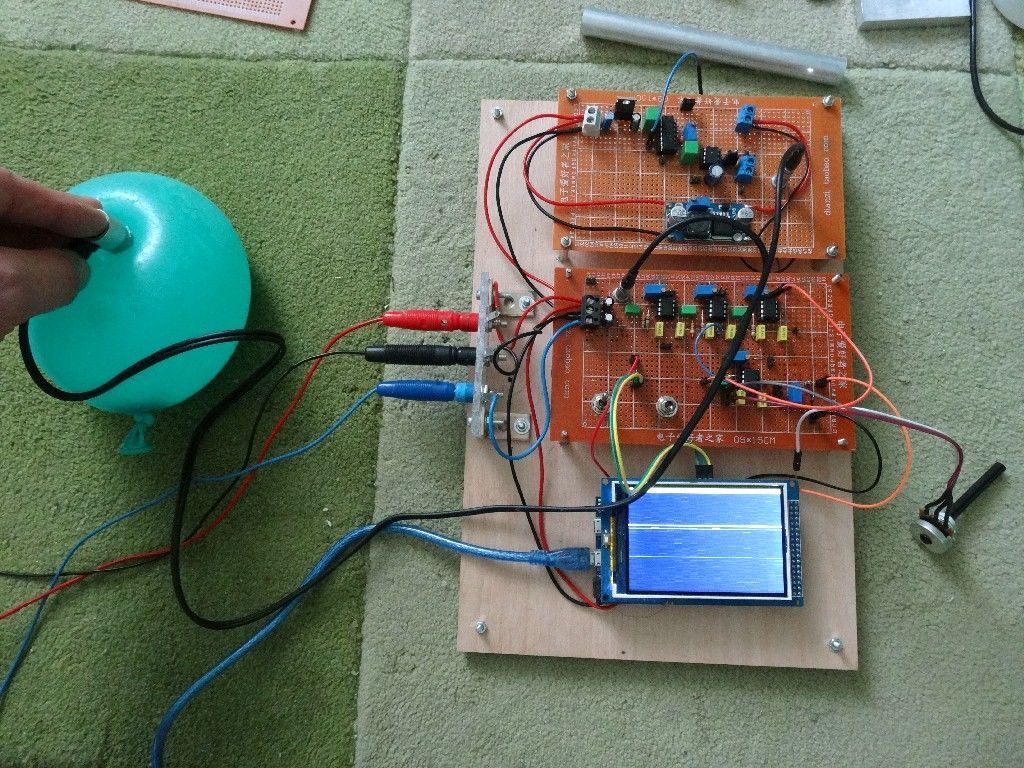

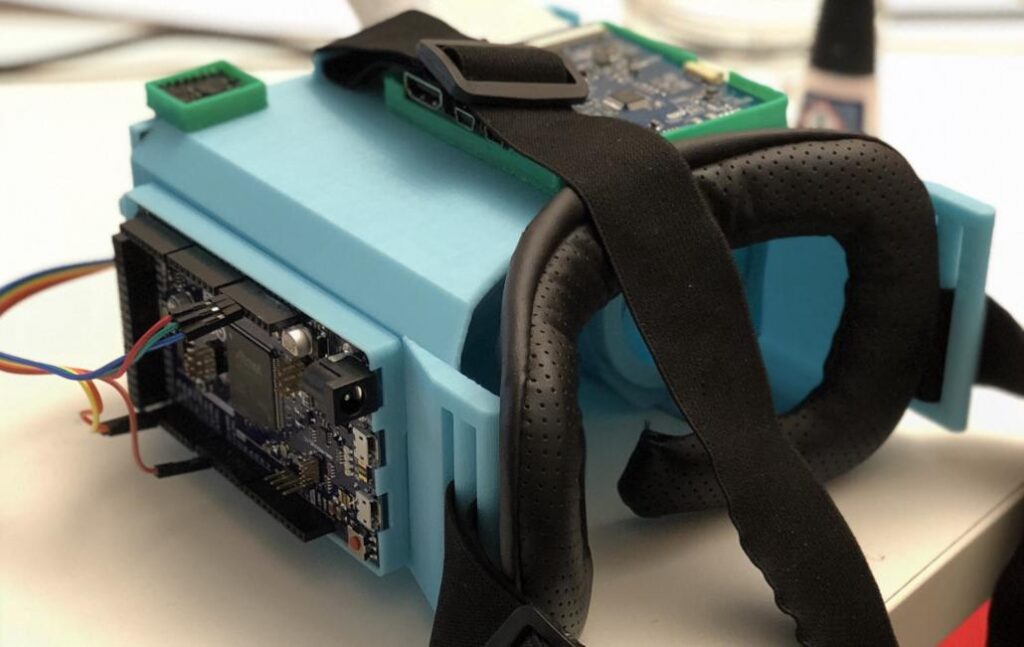

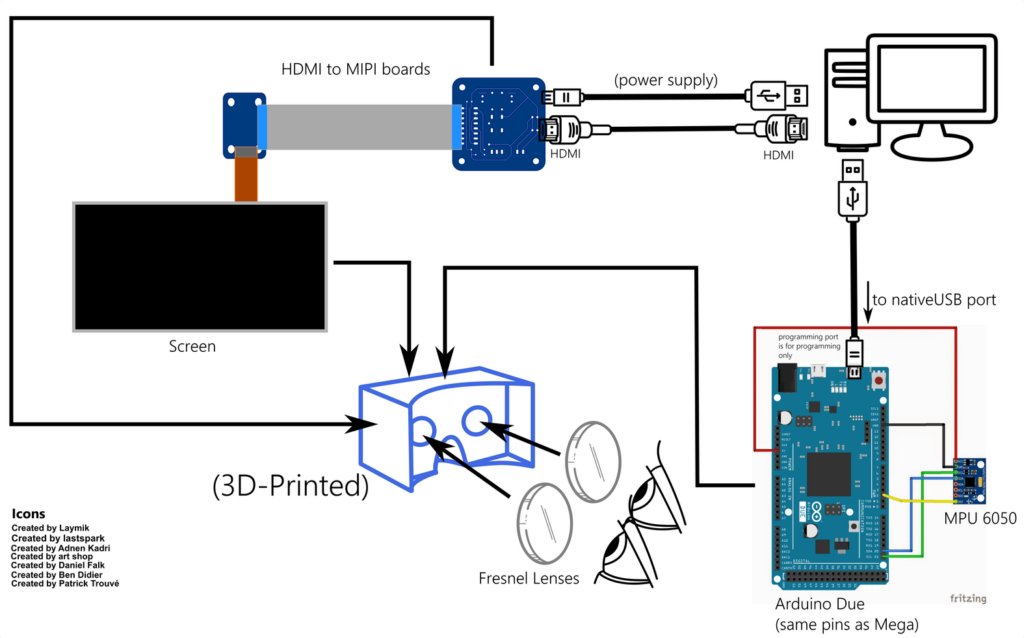

Importantly, VersaTouch can be configured without permanent modification to the newly interactive surface. The setup includes an Arduino Due to receive signals, a Teensy 3.6 to control the transducers, and a MacBook to process the data and calculate the touch positions with a Java program.
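The article says only that a Java program calculates the touch positions. One standard way to turn distances into a position is 2D trilateration, shown here as an assumption rather than as VersaTouch's actual method. Each receiver constrains the finger to a circle, and subtracting one circle equation from the other two leaves a linear system. The class `Trilateration` and its `locate` method are hypothetical. The sketch also assumes the finger-to-receiver distances are already known and that the three receivers do not lie on one line.

```java
/**
 * Hypothetical sketch: 2D trilateration from three receivers at known positions.
 * Assumes the finger-to-receiver distances are already known (for example from
 * time of flight) and that the receivers are not collinear.
 */
final class Trilateration {

    /**
     * Returns {x, y} for a touch at distances d[i] from receivers at (rx[i], ry[i]).
     * Subtracting the first circle equation from the other two leaves a 2x2 linear
     * system, solved here with Cramer's rule.
     */
    static double[] locate(double[] rx, double[] ry, double[] d) {
        if (rx.length != 3 || ry.length != 3 || d.length != 3) {
            throw new IllegalArgumentException("exactly three receivers are expected");
        }
        double a11 = 2 * (rx[1] - rx[0]), a12 = 2 * (ry[1] - ry[0]);
        double a21 = 2 * (rx[2] - rx[0]), a22 = 2 * (ry[2] - ry[0]);
        double b1 = d[0] * d[0] - d[1] * d[1]
                + rx[1] * rx[1] - rx[0] * rx[0] + ry[1] * ry[1] - ry[0] * ry[0];
        double b2 = d[0] * d[0] - d[2] * d[2]
                + rx[2] * rx[2] - rx[0] * rx[0] + ry[2] * ry[2] - ry[0] * ry[0];
        double det = a11 * a22 - a12 * a21;
        if (Math.abs(det) < 1e-12) {
            throw new IllegalArgumentException("receivers must not be collinear");
        }
        return new double[] {(b1 * a22 - a12 * b2) / det, (a11 * b2 - b1 * a21) / det};
    }

    public static void main(String[] args) {
        // Receivers at three corners of a 1 m square; distances to a touch at (0.3, 0.4).
        double[] rx = {0.0, 1.0, 0.0};
        double[] ry = {0.0, 0.0, 1.0};
        double[] d = {0.5, Math.sqrt(0.65), Math.sqrt(0.45)};
        double[] p = Trilateration.locate(rx, ry, d);
        System.out.printf("x = %.3f m, y = %.3f m%n", p[0], p[1]);
    }
}
```

For receivers at (0, 0), (1, 0) and (0, 1) m and a touch at (0.3, 0.4) m, `main` prints `x = 0.300 m, y = 0.400 m`. With more than three receivers, the same subtraction yields an overdetermined system that could be solved by least squares to average out measurement noise.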

More information on the project can be found in the team’s research paper, and you can see it demonstrated in the video below.

VersaTouch is a portable, plug-and-play system that uses active acoustic sensing to track fine-grained touch locations as well as the touch force of multiple fingers on everyday surfaces, without having to permanently instrument them or perform extensive calibration. Our system is versatile in multiple aspects. First, with simple calibration, VersaTouch can be arranged in arbitrary layouts to fit onto crowded surfaces while retaining its accuracy. Second, different touch-input modalities, such as distance and position, can be supported depending on how many sensors are used, to suit the interaction scenario. Third, VersaTouch can sense multi-finger touch and touch force, as well as identify the touch source. Last, VersaTouch can provide vibrotactile feedback to fingertips through the same actuators used for touch sensing.
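Here is one way a single sensor could provide a distance modality after a "simple calibration". This hedged Java sketch assumes distance grows linearly with time of flight (d = v·t). It fits the unknown, surface-dependent wave speed v from a few reference touches at known distances. The linear model, the least-squares fit, and the names `DistanceCalibration`, `fit` and `distance` are assumptions for illustration. The paper may calibrate differently.

```java
/**
 * Hypothetical sketch of a single-receiver distance mode with a simple calibration.
 * Assumes the finger-to-receiver distance is proportional to the measured time of
 * flight (d = v * t), with the surface-dependent wave speed v unknown. The speed is
 * fitted from reference touches at known distances using least squares through the
 * origin: v = sum(d_i * t_i) / sum(t_i^2).
 */
final class DistanceCalibration {
    private final double speedMetresPerSecond;

    private DistanceCalibration(double speedMetresPerSecond) {
        this.speedMetresPerSecond = speedMetresPerSecond;
    }

    /** Fits the propagation speed from reference distances (m) and times of flight (s). */
    static DistanceCalibration fit(double[] knownDistances, double[] timesOfFlight) {
        if (knownDistances.length != timesOfFlight.length || knownDistances.length == 0) {
            throw new IllegalArgumentException("need matching, non-empty reference arrays");
        }
        double dt = 0, tt = 0;
        for (int i = 0; i < knownDistances.length; i++) {
            dt += knownDistances[i] * timesOfFlight[i];
            tt += timesOfFlight[i] * timesOfFlight[i];
        }
        if (tt == 0) {
            throw new IllegalArgumentException("times of flight must not all be zero");
        }
        return new DistanceCalibration(dt / tt);
    }

    /** Fitted propagation speed in metres per second. */
    double speed() {
        return speedMetresPerSecond;
    }

    /** Converts a measured time of flight (s) into an estimated distance (m). */
    double distance(double timeOfFlight) {
        return speedMetresPerSecond * timeOfFlight;
    }

    public static void main(String[] args) {
        // Illustrative numbers only: two reference touches at 10 cm and 20 cm.
        DistanceCalibration cal = DistanceCalibration.fit(
                new double[] {0.10, 0.20}, new double[] {0.0001, 0.0002});
        System.out.printf("speed %.0f m/s, distance %.3f m%n", cal.speed(), cal.distance(0.00015));
    }
}
```

With the illustrative reference data in `main`, the fitted speed is 1000 m/s and a 150 µs time of flight maps to 0.150 m. Fitting through the origin assumes there is no fixed latency in the signal chain. If there is, fitting an intercept as well (d = v·t + c) would absorb it.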