Arduino Does SDI Video with FPGA Help

If you are running video around your home theater, you probably use HDMI. If you are running it in a professional studio, however, you are probably using SDI (Serial Digital Interface). [Chris Brown] looks at SDI and shows a cheap SDI signal generator built around an Arduino.

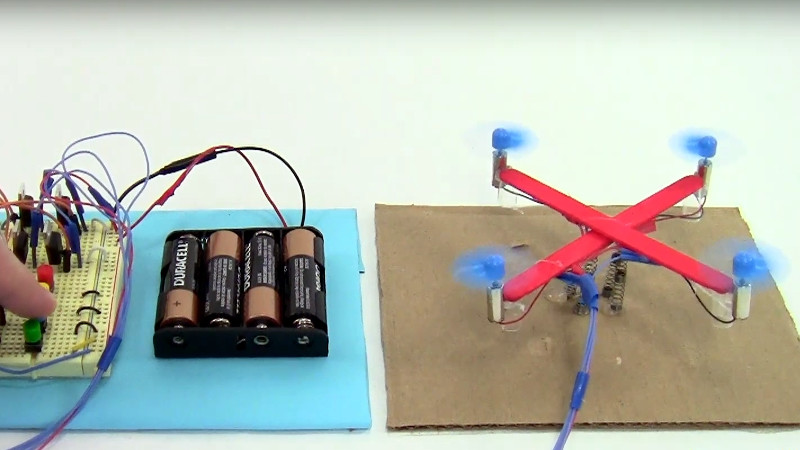

On the face of it, SDI isn’t that hard. In fact, [Chris] calls it “dead simple.” The problem is the bit rate, which can be as high as 1.485 Gbps for the HD-SDI standard. Even for a super fast processor, this is a bit much, so [Chris] turned to the Arduino MKR Vidor 4000. Why? Because it has an FPGA onboard. Alas, the FPGA can’t do more than about 200 MHz, but that’s fast enough to feed an external Semtech GS2962 serializer, which is made to drive SDI signals.
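To see why a roughly 200 MHz FPGA is enough, it helps to run the numbers. The sketch below is only back-of-the-envelope arithmetic, not anything from [Chris]'s code. It assumes the serializer is fed over a parallel bus that is either 10 or 20 bits wide (the post doesn't say which width the project uses), and it assumes the usual 1080-line HD raster of 2200 x 1125 total samples at 30 frames per second with 20 bits per sample period. The function and constant names are made up for illustration.

```python
# Back-of-the-envelope check: how fast must the FPGA clock the parallel bus
# that feeds an external SDI serializer?

HD_SDI_BIT_RATE_BPS = 1_485_000_000   # 1.485 Gbps, from the article
FPGA_LIMIT_HZ = 200_000_000           # "about 200 MHz", from the article


def parallel_clock_hz(serial_bit_rate_bps: int, bus_width_bits: int) -> float:
    """Clock rate needed on a parallel bus that carries the same data
    as a serial link running at serial_bit_rate_bps."""
    return serial_bit_rate_bps / bus_width_bits


def raster_bit_rate_bps(samples_per_line: int, lines_per_frame: int,
                        frames_per_second: int, bits_per_sample: int) -> int:
    """Serial bit rate implied by a video raster (blanking included)."""
    return (samples_per_line * lines_per_frame
            * frames_per_second * bits_per_sample)


if __name__ == "__main__":
    # Assumed raster: 2200 x 1125 total samples, 30 fps, 10-bit luma plus
    # 10-bit chroma per sample period.
    rate = raster_bit_rate_bps(2200, 1125, 30, 20)
    print(f"Raster bit rate: {rate / 1e9:.3f} Gbps")

    # Assumed bus widths; the article does not state which one is used.
    for width in (10, 20):
        clk = parallel_clock_hz(HD_SDI_BIT_RATE_BPS, width)
        verdict = "fits" if clk <= FPGA_LIMIT_HZ else "too fast"
        print(f"{width}-bit bus: {clk / 1e6:.2f} MHz ({verdict})")
```

Under those assumptions, either bus width lands below the FPGA's limit, which is the whole trick: the FPGA only has to produce words at a parallel rate, and the serializer chip handles the gigabit part.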

The resulting project contains the Arduino, the serializer, a custom PCB, and both FPGA and microcontroller code. While the total cost of the project was a little under $200, that’s still better than the $350 to $2000 for a commercial SDI signal generator.

If you want to play along, the files are out on GitHub. We used the Vidor back in 2018 when it first came out. If you need a quick start on FPGAs, there’s always our boot camp.