In a new take on haptic navigation, makers Vojtech Pavlovsky and Tomas Kosicek have come up with a novel feedback system called the “Ariadne Headband.”

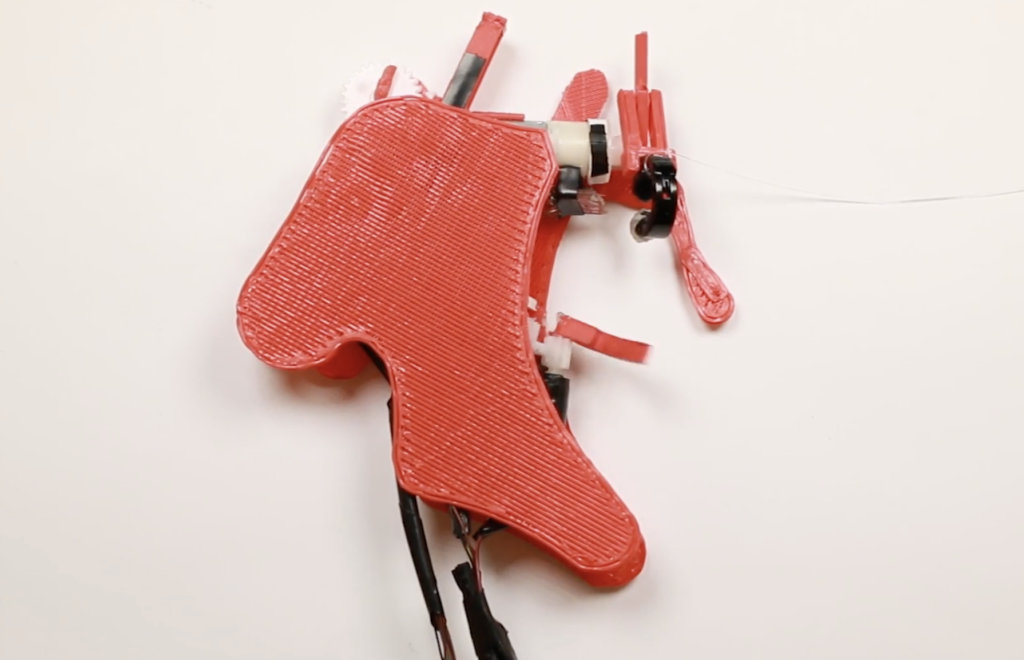

This device, envisioned for use by people with visual impairments as well as those who simply want to get around without looking down at a phone while walking or biking, uses four vibrating motors arranged in a circle around the wearer’s head to indicate travel direction.

An Arduino Nano provides computing power for the setup, along with a compass module and a Bluetooth link to communicate with a companion smartphone app. The Ariadne Headband is currently a prototype, but this type of interface could one day be miniaturized to the point that it could be placed in a hat, helmet, or other everyday headgear.

The Ariadne Headband project consists of two parts: the headband and a control app. The typical usage flow is as follows. First, you open the Ariadne Headband Android app and use it to connect to your headband via Bluetooth. Next, the app asks for your current GPS location. Then you open Google Maps, which is integrated into our app, and select your destination (the place where you want to go).

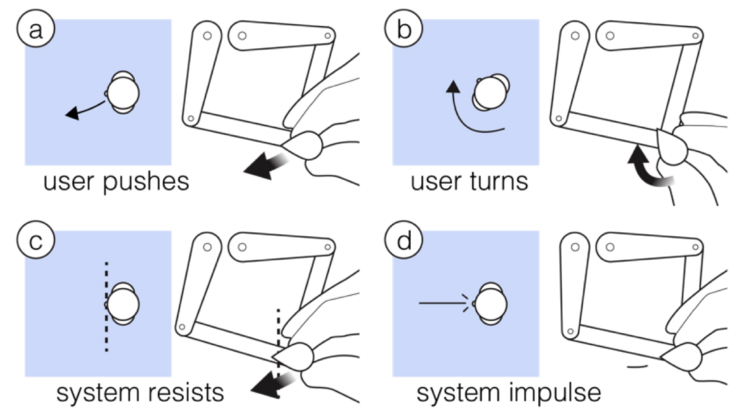

Our Android app then computes the geographical azimuth from your current location to your chosen destination. When you are ready, you put the headband on and start navigating by pressing a button that sends the computed azimuth to it.
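The write-up does not say which formula the app uses for this azimuth. One common choice is the initial great-circle bearing between two latitude/longitude points. The following is a minimal Python sketch under that assumption; the function name `initial_bearing` and its parameters are illustrative and not taken from the Ariadne app.

```python
import math


def initial_bearing(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2.

    Inputs are in decimal degrees. The result is in degrees clockwise
    from true north, normalized to the range [0, 360).
    This is an assumed formula, not necessarily the one the app uses.
    """
    phi1 = math.radians(lat1)
    phi2 = math.radians(lat2)
    dlon = math.radians(lon2 - lon1)

    # East and north components of the direction toward the destination.
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))

    # atan2 returns a value in (-180, 180]; wrap it into [0, 360).
    return math.degrees(math.atan2(y, x)) % 360.0
```

For instance, a destination due east of the start on the equator yields a bearing of about 90 degrees, and one due south yields about 180 degrees. Over the short distances typical of walking or cycling, a simpler flat-earth approximation would likely give nearly the same result.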

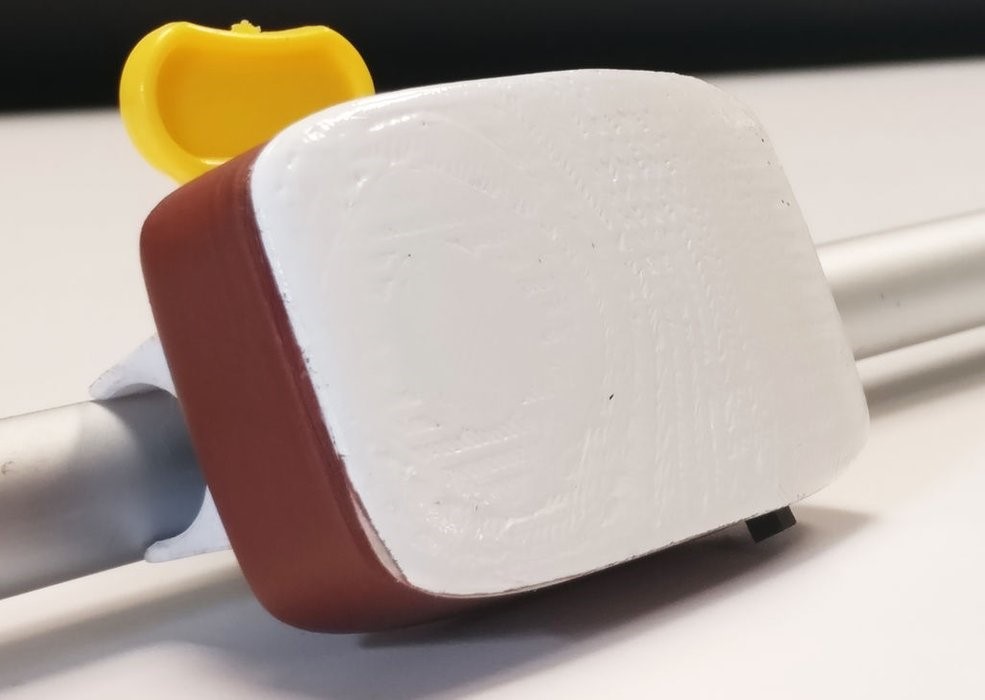

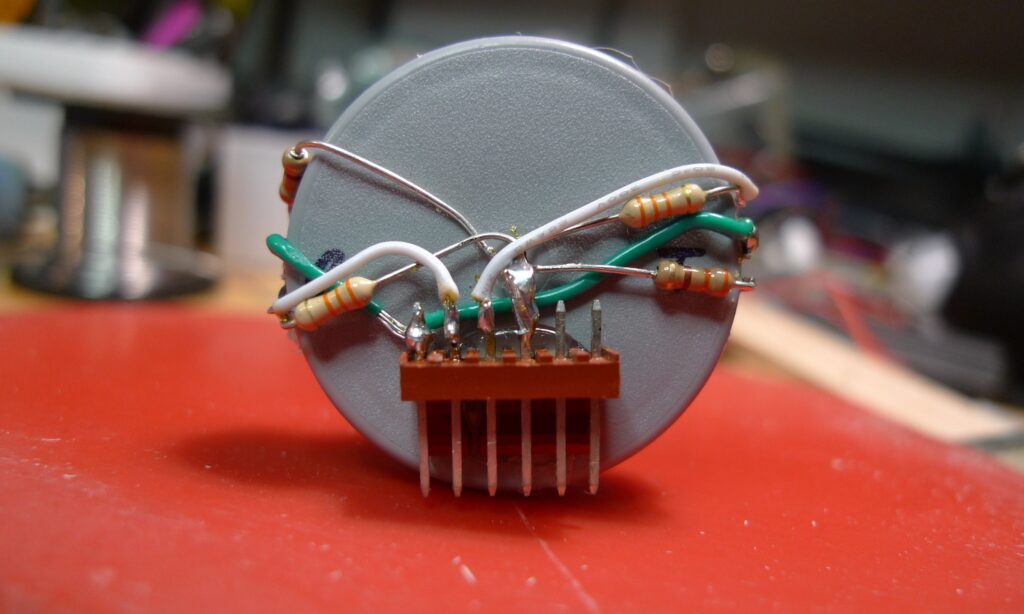

The headband consists of an Arduino Nano board, a GY-271 compass module, an HC-06 Bluetooth module (we selected this module only because it was locally available, and we will switch to BLE soon), and four vibration motors. The compass module tells us the current azimuth, that is, the direction in which the user is looking. All components are placed in a small box at the back of your head. Our aim for the future is to make this as small as possible so that you will not even feel it. It would also be possible to place everything in a hat or helmet, for example, instead of a rubber headband. We are using a rubber headband because it is very easy to work with.

The vibration motors are placed at set positions around your head so that they can signal the direction in which you should head. That direction is computed from your current azimuth and the azimuth sent from the Android app (that is, where you are currently facing and where you should go, respectively).
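The description does not spell out how the two azimuths are combined or where each motor sits. A plausible reading is that the headband takes the difference between the target and current azimuths and vibrates the motor closest to that relative direction. The Python sketch below illustrates that idea only; the motor placement (front, right, back, left), the 90-degree sectors, and the names `relative_bearing` and `motor_for` are all assumptions, and the real firmware runs on the Arduino rather than in Python.

```python
# Assumed motor placement, clockwise from the wearer's face:
# 0 degrees = front, 90 = right, 180 = back, 270 = left.
MOTORS = ("front", "right", "back", "left")


def relative_bearing(current_azimuth, target_azimuth):
    """Angle from where the wearer is facing to the target direction.

    Both inputs are compass azimuths in degrees. The result is measured
    clockwise and normalized to [0, 360).
    """
    return (target_azimuth - current_azimuth) % 360.0


def motor_for(current_azimuth, target_azimuth):
    """Pick the motor whose 90-degree sector contains the relative bearing."""
    rel = relative_bearing(current_azimuth, target_azimuth)
    # Shift by half a sector so each motor covers +/- 45 degrees around
    # its own position, then wrap the index back into 0..3.
    index = int((rel + 45.0) // 90.0) % 4
    return MOTORS[index]
```

With these assumptions, a wearer facing 350 degrees with a target azimuth of 10 degrees gets a relative bearing of 20 degrees and the front motor fires, while a wearer facing east (90 degrees) with a target due north (0 degrees) gets the left motor. The modulo step matters here, since a plain subtraction would mishandle the wrap-around at north.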