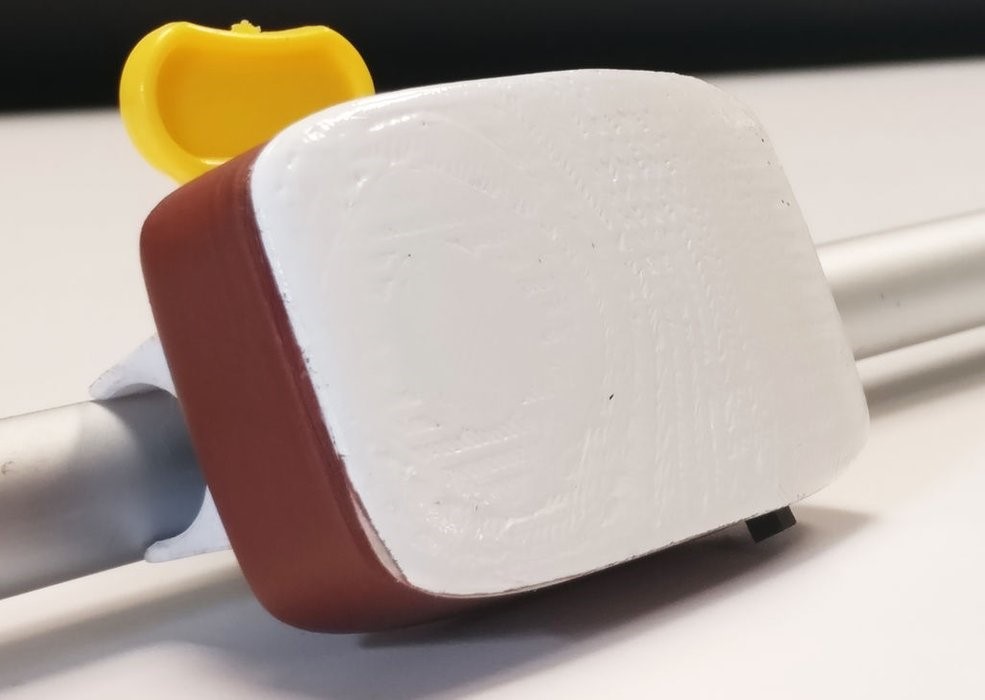

GesturePod is a clip-on smartphone interface for the visually impaired

Smartphones have become a part of our day-to-day lives, but for those with visual impairments, accessing one can be a challenge. This can be especially difficult if one is using a cane that must be put aside in order to interact with a phone.

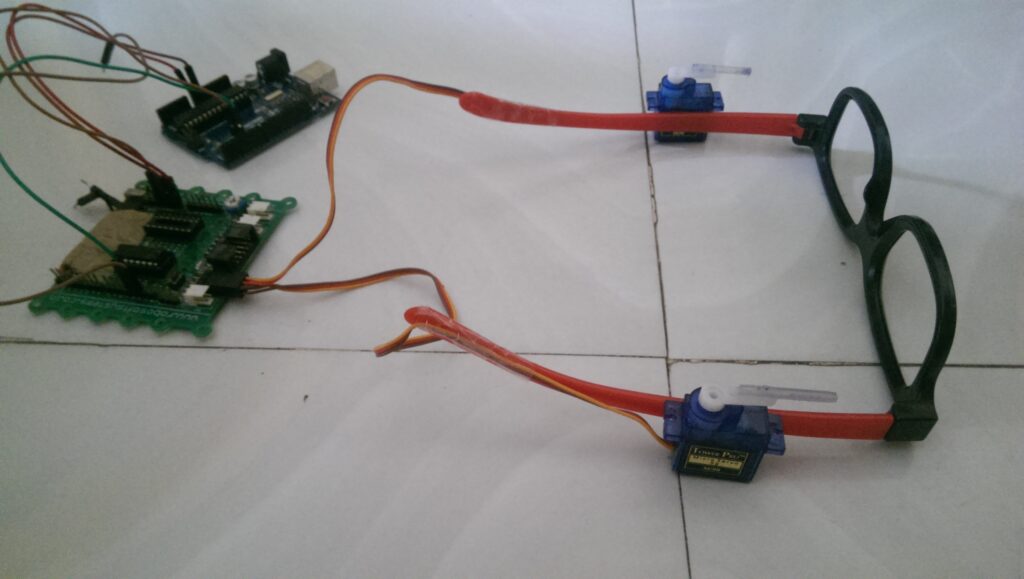

The GesturePod offers an alternative interface that attaches to the cane itself. This small unit is controlled by an MKR1000 and uses an IMU to sense hand gestures applied to the cane.

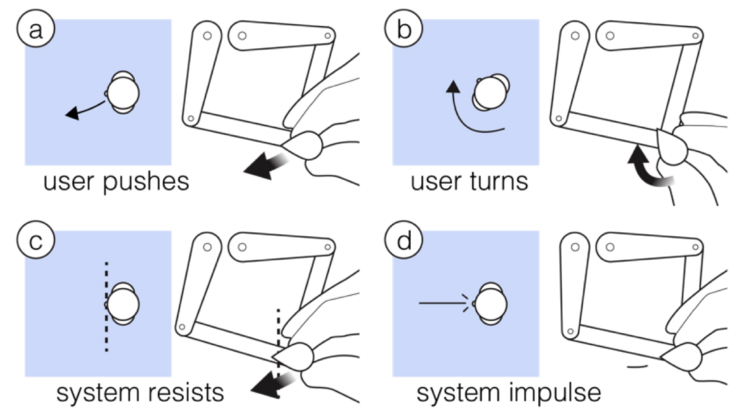

If a user, for instance, taps twice on the ground, a corresponding request is sent to the phone over Bluetooth, causing it to output the time audibly. Five gestures are currently proposed, which could be expanded upon or modified for different functionality as needed.
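To make the double-tap example concrete, here is a minimal sketch of how two taps might be picked out of a stream of IMU readings and turned into a request. It is not GesturePod's method: the project uses a trained machine learning model, whereas this sketch swaps in a simple threshold heuristic. The function names, the thresholds, the 100 Hz sample rate, and the `SPEAK_TIME` request string are all assumptions made for illustration.

```python
from typing import Optional, Sequence


def detect_double_tap(
    magnitudes: Sequence[float],
    sample_rate_hz: float = 100.0,  # assumed IMU sample rate
    threshold: float = 2.0,         # assumed spike threshold, in g
    min_gap_s: float = 0.1,         # spikes closer than this count as one impact
    max_gap_s: float = 0.5,         # second tap must arrive within this window
) -> bool:
    """Return True if two distinct spikes occur within max_gap_s of each other."""
    last_tap_time: Optional[float] = None
    for i, magnitude in enumerate(magnitudes):
        if magnitude < threshold:
            continue
        t = i / sample_rate_hz
        if last_tap_time is None:
            last_tap_time = t
            continue
        gap = t - last_tap_time
        if gap < min_gap_s:
            continue  # still part of the same impact
        if gap <= max_gap_s:
            return True
        last_tap_time = t  # too late: treat this spike as a new first tap
    return False


def request_for(magnitudes: Sequence[float]) -> Optional[str]:
    """Map a detected gesture to a (hypothetical) request string for the phone."""
    return "SPEAK_TIME" if detect_double_tap(magnitudes) else None


# One second of quiet readings at about 1 g, with two taps 0.3 s apart.
samples = [1.0] * 100
samples[10] = 3.0
samples[40] = 3.0
print(request_for(samples))
```

In a real device the request would then be written to the Bluetooth link, and a fixed threshold would likely confuse ordinary cane sweeps with taps, which is one reason a learned classifier is a better fit.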

People using white canes for navigation find it challenging to concurrently access devices such as smartphones. Building on prior research on abandonment of specialized devices, we explore a new touch-free mode of interaction wherein a person with visual impairment can perform gestures on their existing white cane to trigger tasks on their smartphone. We present GesturePod, an easy-to-integrate device that clips on to any white cane, and detects gestures performed with the cane. With GesturePod, a user can perform common tasks on their smartphone without touch or even removing the phone from their pocket or bag. We discuss the challenges in building the device and our design choices. We propose a novel, efficient machine learning pipeline to train and deploy the gesture recognition model. Our in-lab study shows that GesturePod achieves 92% gesture recognition accuracy and can help perform common smartphone tasks faster. Our in-wild study suggests that GesturePod is a promising tool to improve smartphone access for people with VI, especially in constrained outdoor scenarios.