P-51 Cockpit Recreated with Help of Local Makerspace

It’s surprisingly easy to misjudge tips that come into the Hackaday tip line. After filtering out the omnipresent spam, a quick scan of tip titles will often leave a first impression that turns out to be completely wrong. Such was the case with a recent tip that seemed from the subject line to be a flight simulator cockpit. The mental picture I had was of a model cockpit hooked to Flight Simulator or some other off-the-shelf flying game, many of which we’ve seen over the years.

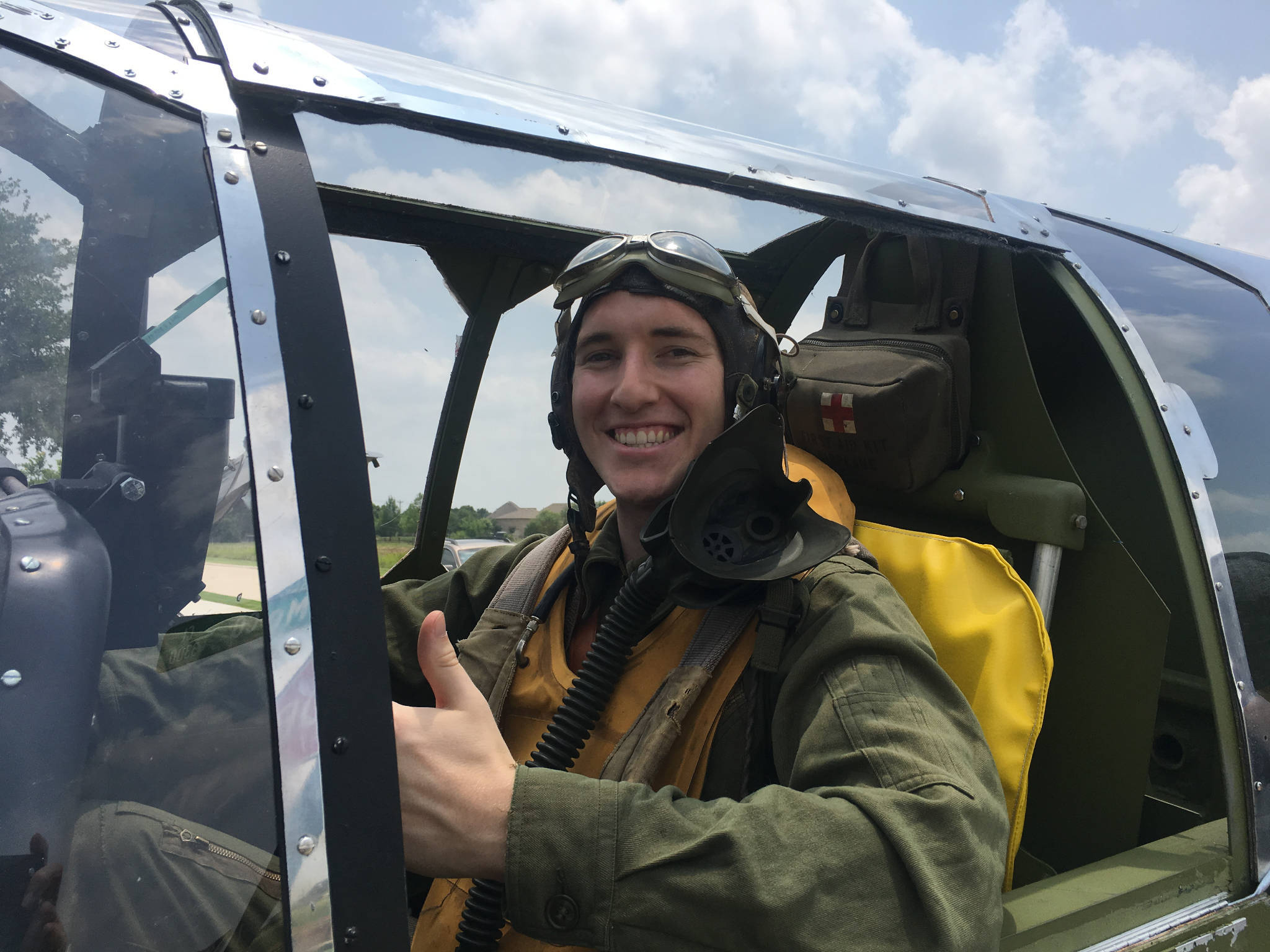

I couldn’t have been more wrong about the project that Grant Hobbs undertook. His cockpit simulator turned out to be so much more than what I thought. After trading a few emails with him to get all the details, I felt like I had to share the series of hacks that led to the short video below, along with the story of how he managed to build the set despite having no previous experience with the usual tools of the trade.

A Novel and a Film

Grant has been making short films for a while, mainly in collaboration with John Dwyer, an author of historical novels. Grant’s shorts are used as promos for John’s books, and nicely capture the period and settings of John’s novels. Most of these films required little in the way of special sets, relying instead on stock footage and vintage costumes to achieve their look and feel. John’s latest novel would change all that.

Called Mustang, the novel centers on a hotshot fighter pilot in WWII. Grant’s vision for the short to promote the book was inspired by the recent Christopher Nolan film Dunkirk, which featured intricate sequences filmed in the cockpit of a Spitfire. Grant wanted a similar look, and began arranging to use a real P-51 Mustang for filming. That presented immediate problems. First, there aren’t that many of the vintage aircraft left, and those that are still flying usually have anachronistic instruments in the cockpit, like GPS. Also, Grant wanted the instruments to respond as if the plane were airborne, and to have the shadows cast by the canopy into the cockpit suggest aerial maneuvers. Such an effect would be difficult to achieve with a plane stuck on a runway.

That’s when Grant realized that a gimballed cockpit simulator was needed. It could have a period-accurate dashboard, be positioned outdoors to take advantage of natural daylight and real backgrounds rather than CGI, and could be pitched, rolled and yawed to simulate flight. It would be perfect, and it would save the project. There was just one problem: he had no idea how to build it.

Helping Hands

Wisely, Grant turned to his local hackerspace, Dallas Makerspace, for help. There he found not only the tools he lacked, but kindred spirits with the necessary skills and the willingness to share them. They started with the cockpit instrument panel, which ended up as a combination of actual flight hardware and mocked-up instruments. The fake instruments used steppers and an Arduino to drive the needles, and a custom iPad app controlled them so they could be animated live during filming. The real instruments, like the artificial horizon and turn-and-slip indicator, were powered by a vacuum pump and responded to the movements of the simulator on its gimbals.
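To give a feel for what driving a gauge needle with a stepper involves, here is a minimal sketch of the arithmetic in Python. It is not Grant's code, which we haven't seen. The gauge range, the 270-degree needle sweep, the 3200 steps per revolution, and the function names `needle_steps` and `steps_to_move` are all assumptions made up for illustration.

```python
def needle_steps(value, min_value, max_value,
                 sweep_degrees=270.0, steps_per_rev=3200):
    """Return the absolute step position for a gauge reading.

    Assumed for illustration only: the needle sits at step 0 when the
    gauge reads min_value, sweeps sweep_degrees at max_value, and the
    motor takes steps_per_rev steps per full revolution.
    """
    # Clamp so an out-of-range command can't drive the needle past its stops.
    clamped = max(min_value, min(max_value, value))
    fraction = (clamped - min_value) / (max_value - min_value)
    angle = fraction * sweep_degrees
    return round(angle / 360.0 * steps_per_rev)


def steps_to_move(current_steps, target_value, min_value, max_value):
    """Return the signed number of steps needed to reach target_value."""
    return needle_steps(target_value, min_value, max_value) - current_steps


if __name__ == "__main__":
    # Hypothetical 0-100 gauge: halfway is 135 degrees of a 270 degree sweep.
    print(needle_steps(50, 0, 100))       # 1200
    # Needle parked at step 1200, commanded back down to a reading of 25.
    print(steps_to_move(1200, 25, 0, 100))  # -600
```

In a setup like the one described, something along these lines would presumably run on the microcontroller side, with the controlling app sending only the target readings.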

Mounting this convincing panel into something was an entirely different undertaking. Grant relied heavily on the experience of DMS members to design a structure strong enough to support the actor and allow for the motion needed to create a convincing effect. The cockpit mockup, made from plasma-cut sheet metal and plywood, is mounted to a heavy-duty three-axis gimbal, including a massive bearing from a pallet jack for the yaw axis.

Grant had originally planned to place the mockup on a mountaintop for shooting, much as the Spitfire mockup from Dunkirk was placed on the edge of a cliff to give an unobstructed horizon to simulate flying over the English Channel. When that proved logistically challenging, he set up on an airport runway and used clever camera blocking to avoid shooting the horizon. Grips manually moved the simulator while Grant manipulated the fake instruments and filmed the results, which I think speak for themselves. If only the budget, and on-set safety, had allowed for simulating the massive four-blade Mustang propeller, the illusion would have been complete.

I really enjoyed digging into this project and all the hacks that it entailed. Movie magic is as much about hacking as anything else, at least behind the cameras, and it’s good to see what’s possible with a limited budget. We recently featured a low-budget but high-style sci-fi movie set build, and we’ve gone in-depth with a playback designer for the Netflix series Lost in Space, both in these pages and as a Hack Chat.