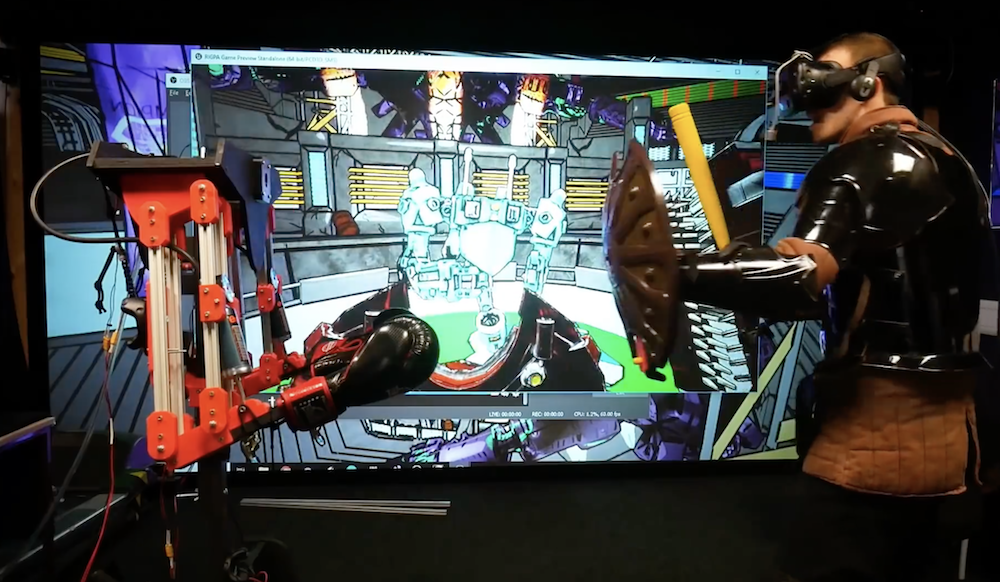

ElastImpact brings a bit more realism to VR

If you’ve ever used a VR system and thought that what was really missing was the feeling of being hit in the face, then a team of researchers at National Taiwan University may have just the solution.

ElastImpact takes the form of a head-mounted display with two impact drivers, mounted on either side of the head roughly parallel to your eyes, for normal (straight-on) impacts, and a third that rotates about the front of your face for side blows.

Each impact driver first stretches an elastic band using a gearmotor, then releases it with a micro servo when an impact is required. The system is controlled by an Arduino Mega, along with a pair of TB6612FNG motor drivers.
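The stretch-then-release cycle described above can be pictured as a small two-state machine. The Python sketch below is a toy simulation only, not the team's firmware: the `Impactor` class, its method names, and the 40 mm travel limit are all hypothetical, and the hardware actions are simply logged as strings rather than sent to a motor driver or servo.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class State(Enum):
    IDLE = auto()     # band slack, nothing stored
    CHARGED = auto()  # band stretched and held, ready to fire


@dataclass
class Impactor:
    """Toy model of one impact driver; names and units are hypothetical."""
    max_stretch_mm: float = 40.0  # assumed travel limit, not from the article
    state: State = State.IDLE
    stretch_mm: float = 0.0
    log: list = field(default_factory=list)

    def charge(self, stretch_mm: float) -> None:
        """Gearmotor stretches the band; the servo holds it in place."""
        if self.state is not State.IDLE:
            raise RuntimeError("already charged; release first")
        if not 0 < stretch_mm <= self.max_stretch_mm:
            raise ValueError("stretch out of range")
        self.log.append(f"motor: stretch to {stretch_mm:.1f} mm")
        self.log.append("servo: hold")
        self.stretch_mm = stretch_mm
        self.state = State.CHARGED

    def release(self) -> float:
        """Servo lets go of the band; returns the stretch that was released."""
        if self.state is not State.CHARGED:
            raise RuntimeError("nothing to release")
        self.log.append("servo: release")
        released, self.stretch_mm = self.stretch_mm, 0.0
        self.state = State.IDLE
        return released
```

Calling `charge(25.0)` followed by `release()` walks the model through one full cycle. A longer stretch would presumably give a harder hit, but the mapping from stretch to force is not stated here and is left out of the sketch.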

Impact is a common effect in both daily life and virtual reality (VR) experiences, e.g., being punched, hit or bumped. Impact force is produced instantly, which distinguishes it from other force feedback, e.g., push and pull. We propose ElastImpact to provide 2.5D instant impact on a head-mounted display (HMD) for realistic and versatile VR experiences. ElastImpact consists of three impact devices, also called impactors. Each impactor blocks an elastic band with a mechanical brake driven by a servo motor and extends the band with a DC motor to store the impact power. When the brake is released, the impactor provides impact instantly. Two impactors are affixed on both sides of the head and connected with the HMD to provide impact in the normal direction, toward the face (i.e., 0.5D in the z-axis). The third impactor is connected with a proxy collider in a barrel in front of the HMD and is rotated by a DC motor in the tangential plane of the face to provide 2D impact (i.e., in the xy-plane). By performing a just-noticeable difference (JND) study, we determine how well users can distinguish impact forces on the head in the normal direction and in the tangential plane, separately. Based on the results, we combine normal and tangential impact as 2.5D impact, and perform a VR experience study to verify that the proposed 2.5D impact significantly enhances realism.
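One way to read the "2.5D" idea is as a split of a virtual hit into a tangential part (any direction in the xy-plane, handled by the rotating impactor) and a normal part (toward the face only, handled by the two side impactors). The Python sketch below illustrates that split under stated assumptions: the function name `split_impact`, the axis convention (positive z meaning "toward the face"), and the choice to discard outward-pointing z components are all hypothetical and not taken from the paper.

```python
import math


def split_impact(x: float, y: float, z: float):
    """Split a hit vector into tangential and normal parts.

    Returns (angle_deg, tangential_mag, normal_mag), where angle_deg is the
    direction in the xy-plane (None if there is no tangential component).
    Assumes +z points toward the face; this convention is hypothetical.
    """
    tangential_mag = math.hypot(x, y)
    if tangential_mag > 0:
        # Angle the rotating impactor would need to face, in [0, 360).
        angle_deg = math.degrees(math.atan2(y, x)) % 360.0
    else:
        angle_deg = None
    # Only toward-the-face impact is available on the z-axis (the "0.5D").
    normal_mag = max(0.0, z)
    return angle_deg, tangential_mag, normal_mag
```

For example, a hit vector of `(0.0, 2.0, 3.0)` yields a tangential direction of 90 degrees with magnitude 2 and a normal magnitude of 3, while a vector pointing purely away from the face produces no impact at all in this model.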