The Most Immersive Pinball Machine: Project Supernova

Over at [Truthlabs], a 30 year old pinball machine was diagnosed with a major flaw in its game design: It could only entertain one person at a time. [Dan] and his colleagues set out to change this, transforming the ol’ pinball legend “Firepower” into a spectacular, immersive gaming experience worthy of the 21st century.

A major limitation they wanted to overcome was screen size. A projector mounted to the ceiling should turn the entire wall behind the machine into a massive 15-foot playfield for anyone in the room to enjoy.

With so much space to fill, the team assembled a visual concept tailored to blend seamlessly with the original storyline of the arcade classic, studying the machine’s artwork and digging deep into the sci-fi archives. They then translated their ideas into 3D graphics utilizing Cinema4D and WebGL along with the usual designer’s toolbox. Lasers and explosions were added, ready to be triggered by game interactions on the machine.

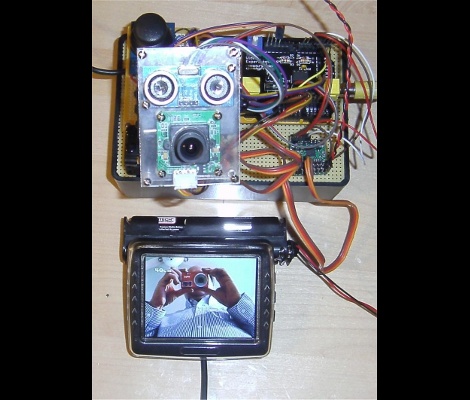

To hook the augmentation into the pinball machine’s own game progress, they elaborated an elegant solution, incorporating OpenCV and OCR, to read all five of the machine’s 7 segment displays from a single webcam. An Arduino inside the machine taps into the numerous mechanical switches and indicator lamps, keeping a Node.js server updated about pressed buttons, hits, the “Lange Change” and plunged balls.

The result is the impressive demonstration of both passion and skill you can see in the video below. We really like the custom shader effects. How could we ever play pinball without them?

Filed under: classic hacks, video hacks