The Science Kit is now available in Italian and Spanish editions.

Launched back in January 2019 in partnership with Google, the Arduino Science Kit Physics Lab is designed for middle school students (aged 11 to 14) to explore science. It is now available with Italian and Spanish course content, making it more accessible than ever.

“You discovered it yourself, so it was really fun doing that” – UK student, ARK Burlington Danes Academy.

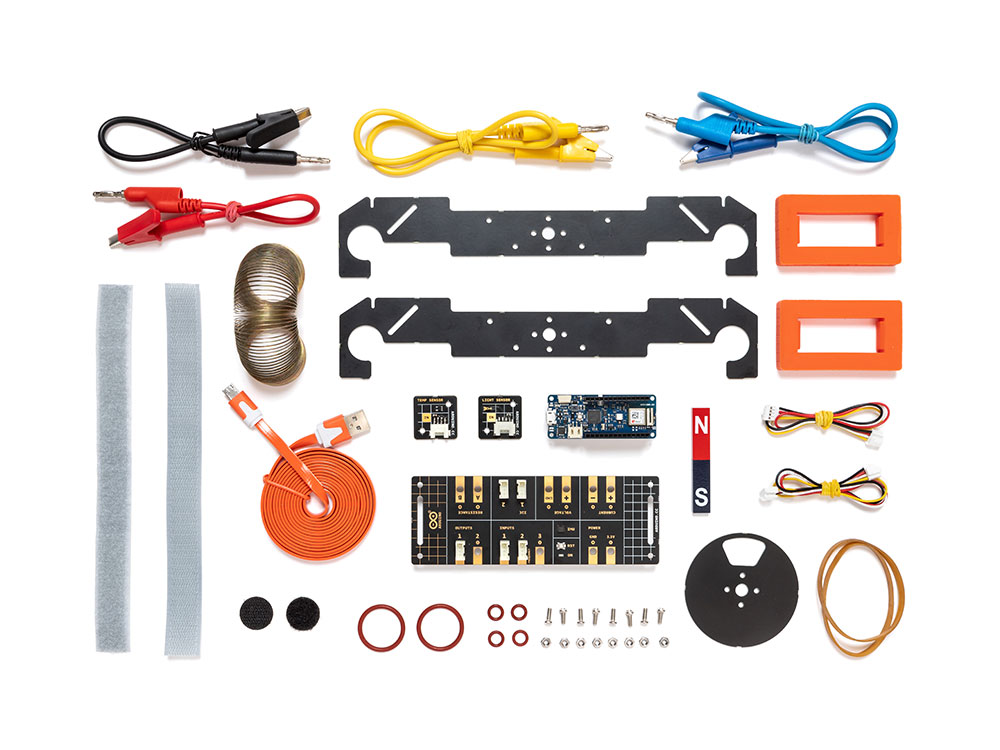

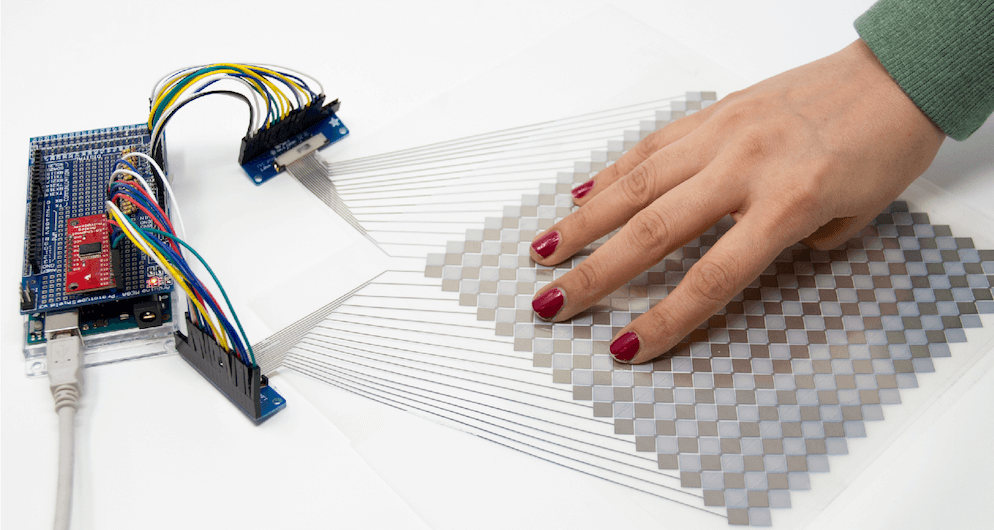

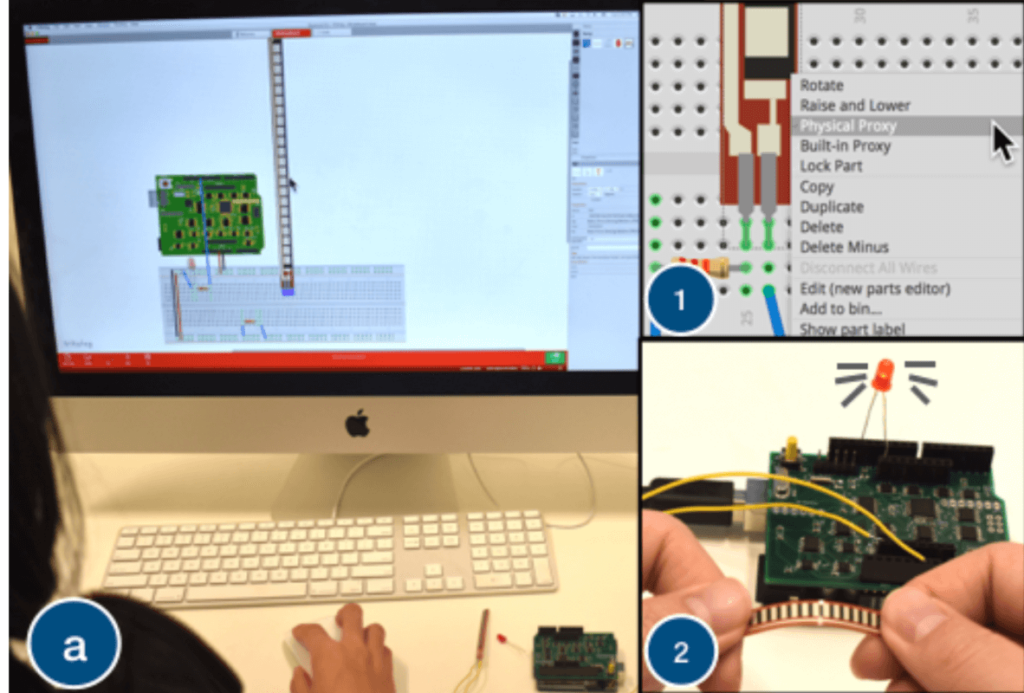

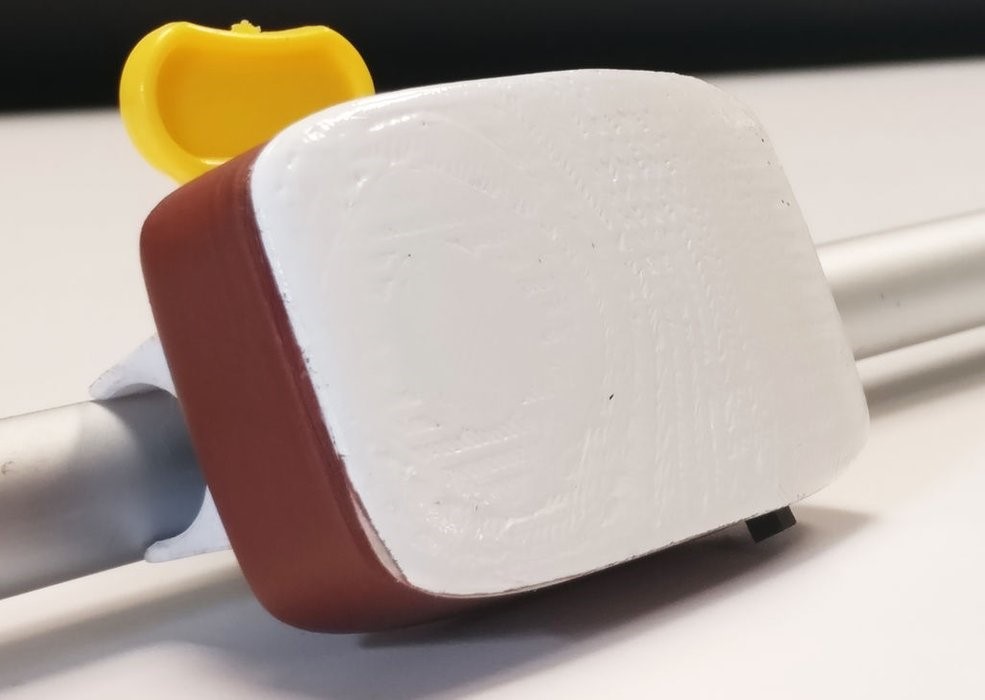

The Arduino Science Kit Physics Lab requires no prior electronics knowledge. It features online course content and equipment to conduct experiments and test the fundamental laws of traditional physics through nine exciting science projects inspired by popular fairground rides like the Gravitron and Pirate Ship. It is perfect for developing transferable skills such as critical thinking and problem solving through an inquiry-based learning approach. Students will experiment with forces, motion, magnetism, and conductivity, forming their own hypotheses like real scientists, checking their assumptions, and logging data in real time using Google’s Science Journal for Android.

“Makes it more accessible for students to do practical physics” – Graeme Wood, UK – Physics teacher, ARK Burlington Danes Academy.

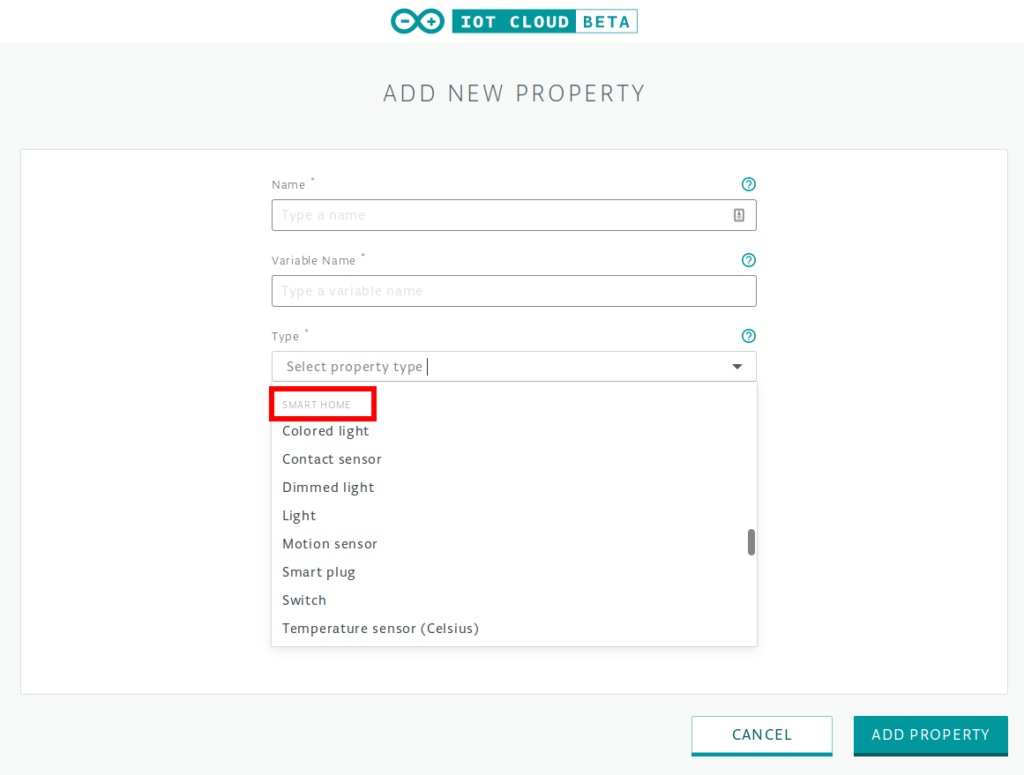

Getting started is incredibly easy: students simply connect their Android mobile device to the board, build their project, and then use the onboard sensor and plug-and-play modules to simulate the rides’ dynamics. Data is transmitted from the experiment to the student’s mobile device via Bluetooth, where they can analyse and record their results in Google’s Science Journal app or on worksheets.

The Arduino Education Science Kit Physics Lab isn’t confined to the classroom. In fact, students can use the kit outdoors to turn the playground into their very own fairground by applying the concepts they’ve learned to design and test their own rides. Based upon the Arduino MKR WiFi 1010, it is a fully portable lab that includes a range of sensors to measure light, temperature, motion, magnetic fields, and much more, all of which comes in a protective Gratnells classroom tray.

Arduino Education aims to assist educators by providing our programs, online course content, and worksheets in as many languages as possible. This covers not only the relevant European languages: Arabic and Japanese, for example, are also planned for future release. Next in line for the Science Kit Physics Lab will be German, Hungarian, and Portuguese, so watch this space.

The Italian and Spanish editions of the Science Kit are now available.

Launched in January 2019 in collaboration with Google, the Arduino Science Kit Physics Lab is designed for middle school students (aged 11 to 14) to learn about science. The content is now available in Italian and Spanish, making it more accessible than ever.

“You discover everything yourself; it was really fun” – UK student, ARK Burlington Danes Academy.

The Arduino Science Kit Physics Lab requires no prior electronics knowledge. It includes an online course and equipment to carry out experiments and test the fundamental laws of traditional physics through nine exciting science projects inspired by popular fairground rides such as the Gravitron and the Pirate Ship. It is perfect for developing skills such as critical thinking and problem solving through a reflective approach to learning. Students will experiment with forces, motion, magnetism, and conductivity, forming their own hypotheses like real scientists, testing their assumptions, and collecting data in real time using Google’s Science Journal app for Android.

“Makes practical physics more accessible for students” – Graeme Wood, UK – Physics teacher, ARK Burlington Danes Academy.

Getting started is incredibly easy: students simply connect their Android device to the board, assemble the project, and then use the sensors on the shield and the plug-and-play modules to simulate the rides. Data is transmitted from the experiment to the student’s mobile device via Bluetooth, where the results can be viewed and analysed in Google’s Science Journal app or on the student worksheets.

The Arduino Education Science Kit Physics Lab is not intended for classroom use only. In fact, students can use the kit outdoors to turn their playground into their own science fair, applying the concepts they have learned to design and test their own rides. Based on the Arduino MKR WiFi 1010, it is a portable lab that includes a set of sensors to measure light, temperature, motion, magnetic fields, and much more, all in a protective Gratnells box.

Arduino Education aims to support teachers by providing our programs, online content, and student worksheets in as many languages as possible. This covers not only the relevant European languages: Arabic and Japanese, for example, are planned for future releases. The next languages for the Science Kit Physics Lab will be German, Hungarian, and Portuguese.

From today, the Science Kit is also available in Italian and Spanish.

In January 2019, in collaboration with Google, the Arduino Science Kit Physics Lab was launched for middle school students (aged 11 to 14) and beyond, with the aim of exploring science while having fun.

From today, the course content is available in Italian and Spanish, making it even more accessible.

“You are the one experimenting first-hand, and it was really fun to be able to do that,” commented an English student from ARK Burlington Danes Academy.

The Arduino Science Kit Physics Lab requires no prior knowledge of electronics. It comes with an online course containing all the content and tools needed to carry out experiments and test the fundamental laws of classical physics through nine science experiments inspired by the most popular amusement park rides, such as the Pirate Ship or the Gravitron. It is an ideal product for developing skills such as problem solving and critical thinking through a learning method based on hands-on inquiry. Students experiment with forces, motion, magnetism, and conductivity, forming their own hypotheses like real scientists, checking their assumptions, and recording data in real time using Google Science Journal for Android.

“It makes practising physics much more accessible for students” – Graeme Wood, physics teacher, ARK Burlington Danes Academy.

It is incredibly easy to get started: students simply connect their Android mobile device to the board, build the project, and then use the onboard sensors and plug-and-play modules to simulate the rides’ dynamics. Data is transmitted from the experiment to the mobile device via Bluetooth, where students can analyse and record the results in Google Science Journal or on their worksheets.

The Arduino Education Science Kit Physics Lab is not limited to the classroom. In fact, students can also use the kit outdoors, turning the schoolyard into an amusement park by applying the concepts they have learned and designing and testing their own rides. Based on the Arduino MKR WiFi 1010, it is a complete portable lab that includes various sensors to measure light, temperature, motion, magnetic fields, and much more, all packed in a convenient, secure box.

Arduino Education aims to assist teachers by supporting courses, online content, and student worksheets in as many languages as possible. This covers not only European languages: Arabic and Japanese, for example, are also planned for the future. The Science Kit Physics Lab will soon be released in German, Hungarian, and Portuguese, so keep following us to stay informed.