Arduino, Accelerometer, and TensorFlow Make You a Real-World Street Fighter

A question: if you’re controlling the classic video game Street Fighter with gestures, aren’t you just, you know, street fighting?

That’s a question [Charlie Gerard] is going to have to tackle should her AI gesture-recognition controller experiments take off. [Charlie] put together the game controller to learn more about the dark arts of machine learning in a fun and engaging way.

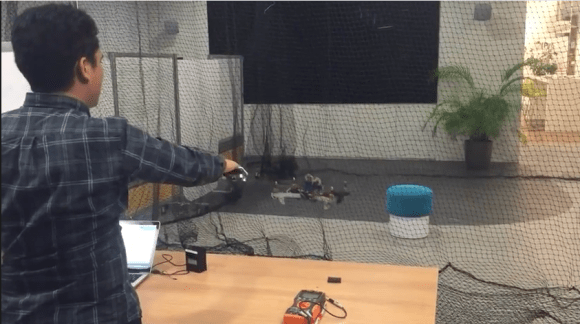

The controller consists of a battery-powered Arduino MKR1000 with WiFi and an MPU6050 accelerometer. Held in the hand, the controller streams accelerometer data to an external PC, capturing the characteristics of the motion. [Charlie] picked three moves (a punch, an uppercut, and the dreaded Hadouken) and captured hundreds of examples of each. The raw data was massaged, converted to Tensors, and used to train a model that tells the three moves apart. In initial tests, the recognition seems to work well. [Charlie] also made an online version that captures motion from your smartphone. The demo is explained in the video below; sadly, we couldn’t get more than three Hadoukens in before crashing it.
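To give a feel for what "massaged and converted to Tensors" might involve, here is a minimal sketch in plain Python. It is not [Charlie]'s code. The fixed window length, the zero-padding, and every function name below are our own assumptions for illustration. The idea is simply that each recorded gesture becomes a fixed-length list of numbers, and each label becomes a one-hot vector that a classifier can train against.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # one (x, y, z) accelerometer reading

# The three moves named in the article; the order is an arbitrary choice.
GESTURES = ["punch", "uppercut", "hadouken"]

# Hypothetical window length, kept tiny for the demo.
SAMPLES_PER_GESTURE = 4


def fix_length(recording: Sequence[Sample], length: int) -> List[Sample]:
    """Truncate or zero-pad a recording to exactly `length` samples."""
    trimmed = list(recording[:length])
    trimmed += [(0.0, 0.0, 0.0)] * (length - len(trimmed))
    return trimmed


def to_features(recording: Sequence[Sample],
                length: int = SAMPLES_PER_GESTURE) -> List[float]:
    """Flatten a recording into one feature vector: x0, y0, z0, x1, ..."""
    return [axis for sample in fix_length(recording, length) for axis in sample]


def one_hot(label: str) -> List[float]:
    """Encode a gesture name as a one-hot vector over GESTURES."""
    index = GESTURES.index(label)
    return [1.0 if i == index else 0.0 for i in range(len(GESTURES))]


def build_dataset(recordings: Sequence[Tuple[Sequence[Sample], str]]):
    """Turn (recording, label) pairs into parallel feature and label lists."""
    features = [to_features(rec) for rec, _ in recordings]
    labels = [one_hot(label) for _, label in recordings]
    return features, labels
```

The two nested lists that `build_dataset` returns are rectangular, so they could be handed to whatever tensor constructor your framework of choice provides. A real pipeline would likely also normalize the readings and use a far longer window.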

With most machine learning projects seeming to concentrate on telling cats from dogs, this is a refreshing change. We’re seeing lots of offbeat machine learning projects these days, from cryptocurrency wallet attacks to a semi-creepy workout-monitoring gym camera.