Creating A Sonic Landscape With A Glitching CD Player

CDs were a great advancement in audio quality when they were first put on the market. There’s no vinyl-style degradation of the medium if it’s played over and over, and there’s no risk of turning one into a giant pile of ribbon while rewinding, as can happen with a cassette tape. The one downside was that taking them on the move required special hardware and software to prevent the inevitable skipping. If you look at the skipping not as a downside, though, but as a way to produce interesting music, you might end up with a pretty unique piece of hardware.

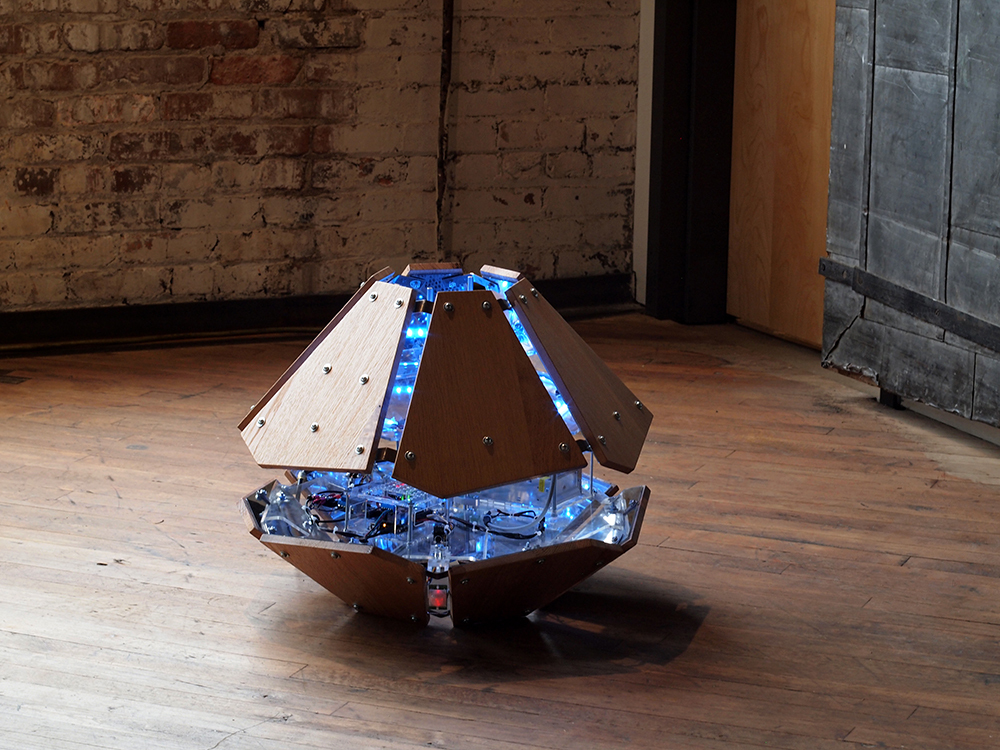

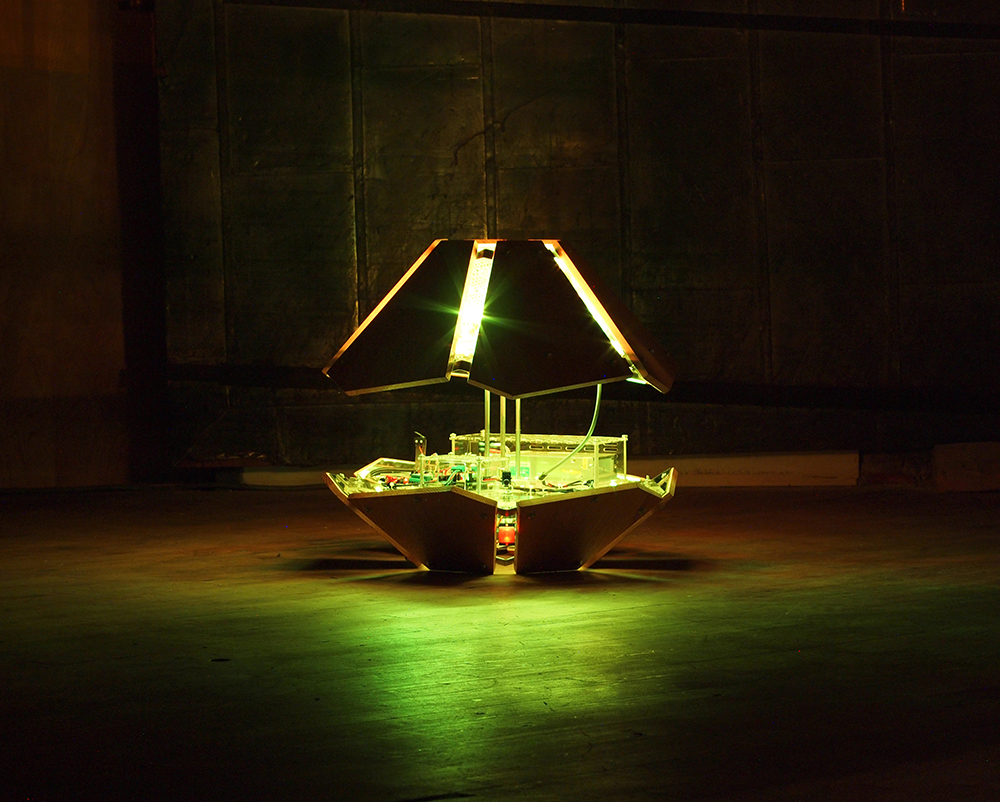

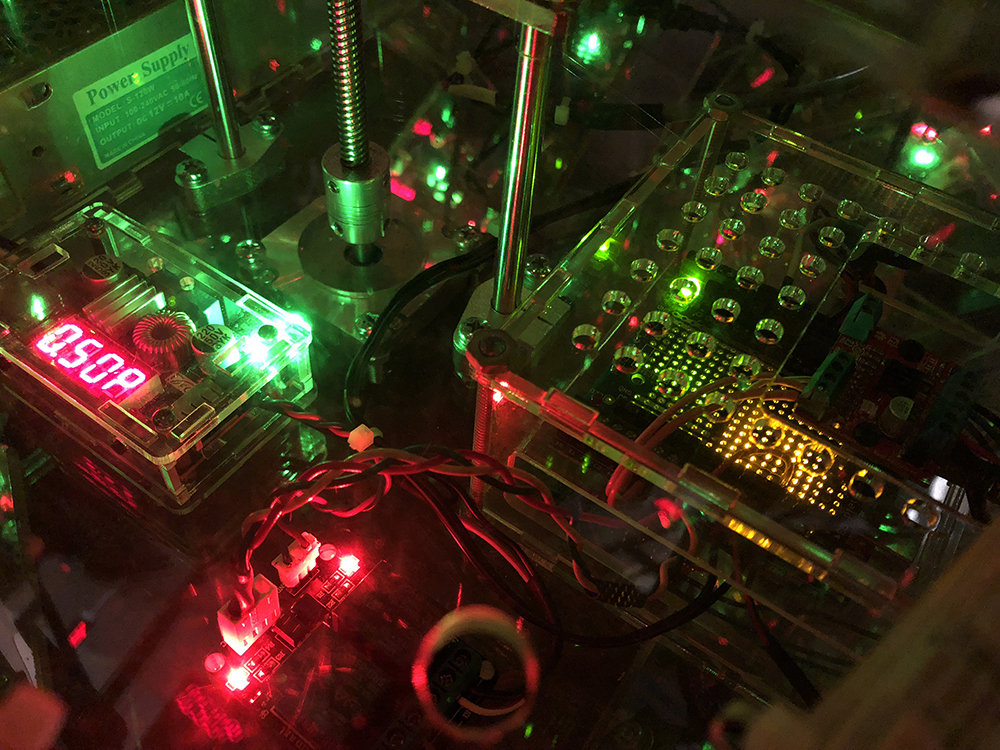

[Dmitry] is known for his interesting art installations, and the latest one uses parts from three 1988 Sony D2 CD players that have been reassembled to take advantage of a skipping and glitching CD. Thanks to a processor modification, the modified equipment is able to play during pause or rewind, and it can also change the rotational speed of the disc. Other pieces of hardware are included for finer control over the glitching and skipping of the audio being read off the CD.

The new device functions as a working musical instrument, although [Dmitry] says that it is more useful for deconstructing the information stored on the disc and exploring the medium itself. Of course, if you have enough motivation, you can find sounds from almost anywhere on (or in) the planet too.