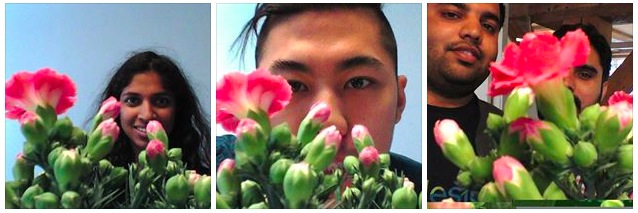

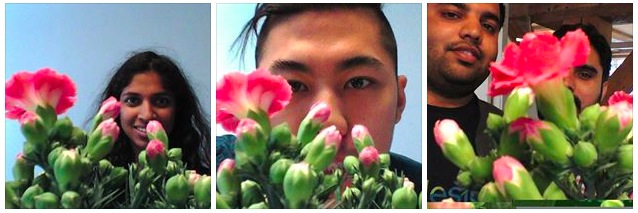

Smile! This plant wants to take a selfie with you

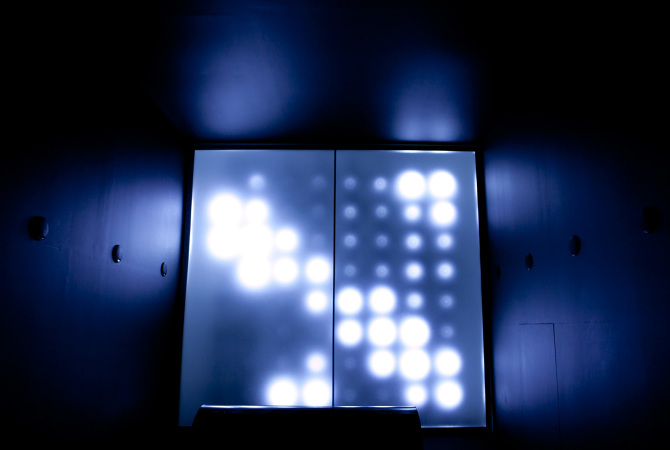

The Selfie Plant is an interactive installation that takes pictures of itself using an Arduino Yún and uploads them to Facebook through the Facebook Graph API. It was developed by a group of students at the Copenhagen Institute of Interaction Design during “The secret life of objects”, a course taught by Joshua Noble and Simone Rebaudengo together with the Arduino.cc team. The final prototype was shown at the class exhibition so the students could observe how visitors interacted with it, and the resulting photos are on Facebook.

The Selfie Plant is an attempt to provoke some thought about this genre of expression. The plant expresses itself through nice-looking selfies, which it takes according to its mood, the weather or the occasion. It mimics human behaviour by striking its best pose and adjusting the camera angle to take the perfect selfie.
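The write-up does not say how mood or weather turn into a pose, so the following is only a hypothetical Python sketch of the idea: a mood picks a preset pair of angles for the two servos (plant and camera stick), a weather flag nudges the plant, and every angle is clamped to the 0–180° range of a typical hobby servo. The mood names, preset angles, the `sunny` rule and the helper names `Pose`, `POSES`, `clamp` and `choose_pose` are all invented for illustration.

```python
from dataclasses import dataclass

# Typical hobby-servo range in degrees (assumption; check your servos' datasheet).
SERVO_MIN, SERVO_MAX = 0, 180


@dataclass(frozen=True)
class Pose:
    plant_angle: int   # hypothetical: servo that tilts the plant
    camera_angle: int  # hypothetical: servo that moves the camera stick


# Hypothetical presets; the project's real moods and angles are not documented here.
POSES = {
    "happy": Pose(120, 60),
    "sleepy": Pose(70, 100),
    "neutral": Pose(90, 90),
}


def clamp(angle: int) -> int:
    """Keep an angle inside the servo's mechanical range."""
    return max(SERVO_MIN, min(SERVO_MAX, angle))


def choose_pose(mood: str, sunny: bool) -> Pose:
    """Pick a pose for the current mood, falling back to 'neutral' for unknown moods."""
    base = POSES.get(mood, POSES["neutral"])
    # Hypothetical rule: lean the plant a little further when it is sunny.
    offset = 15 if sunny else 0
    return Pose(clamp(base.plant_angle + offset), clamp(base.camera_angle))
```

For example, `choose_pose("happy", True)` returns `Pose(plant_angle=135, camera_angle=60)`. On the real installation the resulting angles would be sent to the servos from the Yún's sketch; keeping the decision as a pure function simply makes the mapping easy to tweak and test.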

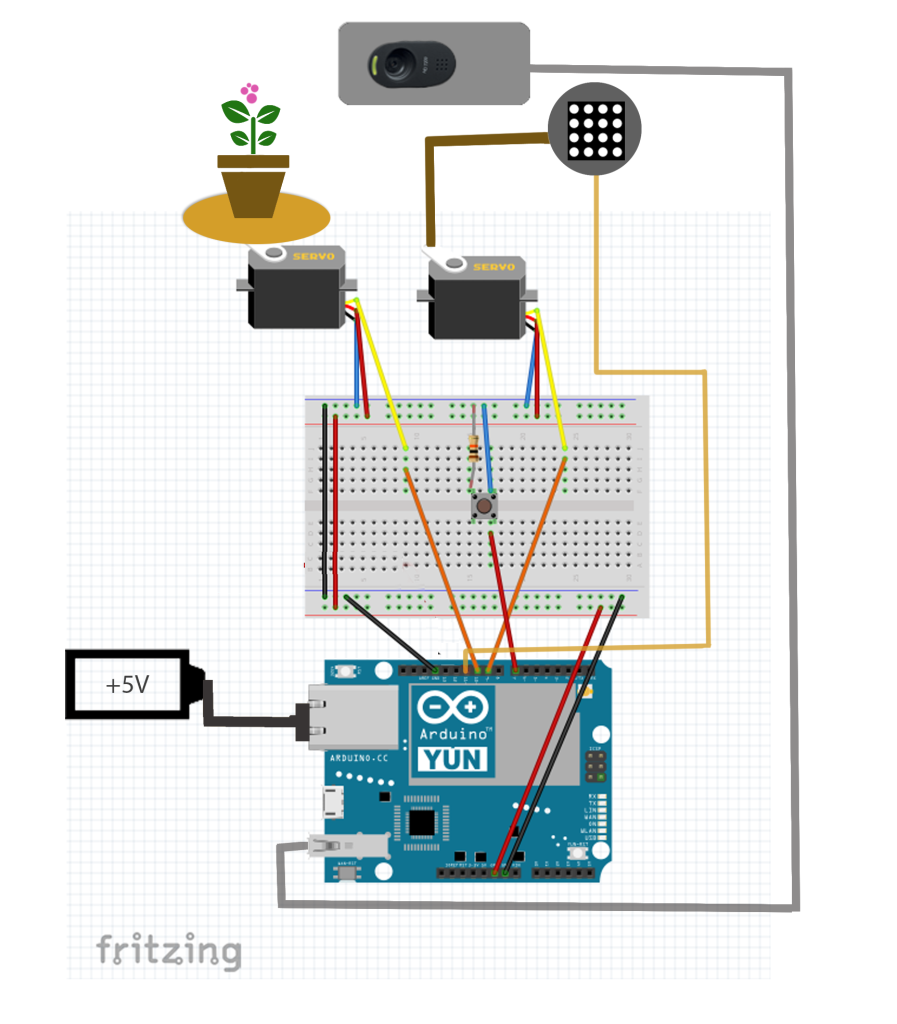

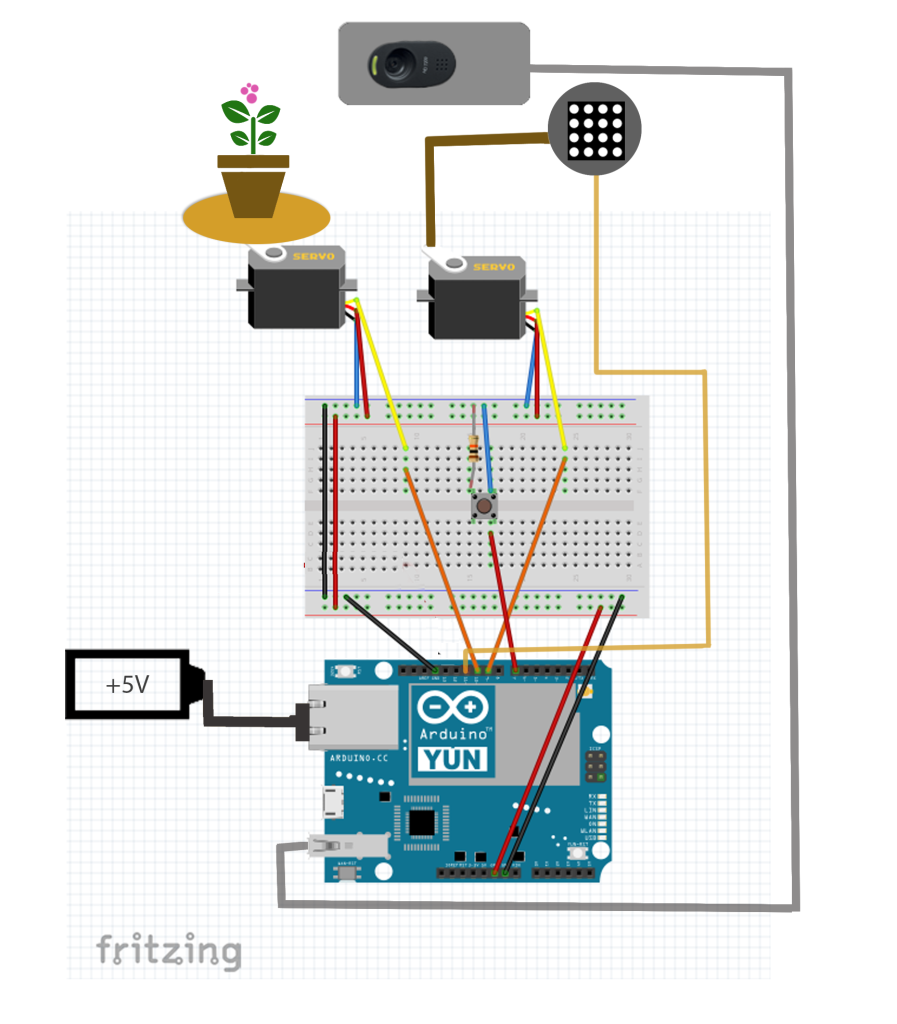

The documentation on GitHub covers all the details of the project. It consists of an Arduino Yún controlling two servo motors, which adjust the positions of the plant and the camera stick, and a Python script (facebook.py) that communicates with Facebook’s Graph API to post the captured photos on the plant’s Facebook profile. You will also need an LED matrix, a breadboard and a 5 V battery.
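The actual facebook.py lives in the GitHub repository; as a rough idea of what posting a photo involves, here is a standard-library-only Python sketch that builds a multipart POST to the Graph API's `/{id}/photos` edge. The unversioned base URL, the `caption` field, the `selfie.jpg` filename and the helper names `build_multipart` and `build_photo_request` are assumptions, not the project's code. Facebook's publishing permissions have also changed considerably since this project was built, so check the current Graph API reference before relying on it.

```python
import urllib.request
import uuid

# Assumed base URL; in practice you would pin an API version (e.g. /vXX.X/).
GRAPH_URL = "https://graph.facebook.com"


def build_multipart(fields, file_field, filename, file_bytes, content_type="image/jpeg"):
    """Encode text fields plus one file as a multipart/form-data body."""
    boundary = uuid.uuid4().hex
    parts = []
    for name, value in fields.items():
        parts.append(f"--{boundary}\r\n".encode())
        parts.append(f'Content-Disposition: form-data; name="{name}"\r\n\r\n'.encode())
        parts.append(f"{value}\r\n".encode())
    # The photo itself goes in its own part with a filename and content type.
    parts.append(f"--{boundary}\r\n".encode())
    parts.append(
        f'Content-Disposition: form-data; name="{file_field}"; filename="{filename}"\r\n'.encode()
    )
    parts.append(f"Content-Type: {content_type}\r\n\r\n".encode())
    parts.append(file_bytes)
    parts.append(b"\r\n")
    parts.append(f"--{boundary}--\r\n".encode())  # closing boundary
    return b"".join(parts), boundary


def build_photo_request(profile_id, access_token, image_bytes, caption=""):
    """Build (but do not send) a POST that uploads one photo via the Graph API."""
    body, boundary = build_multipart(
        {"access_token": access_token, "caption": caption},  # field names assumed
        "source",
        "selfie.jpg",
        image_bytes,
    )
    return urllib.request.Request(
        url=f"{GRAPH_URL}/{profile_id}/photos",
        data=body,
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
        method="POST",
    )
```

Sending it is then a matter of `urllib.request.urlopen(build_photo_request(...))` with a valid token. Building the request separately from sending it keeps the encoding testable without network access.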

Here’s a preview of the diagram: