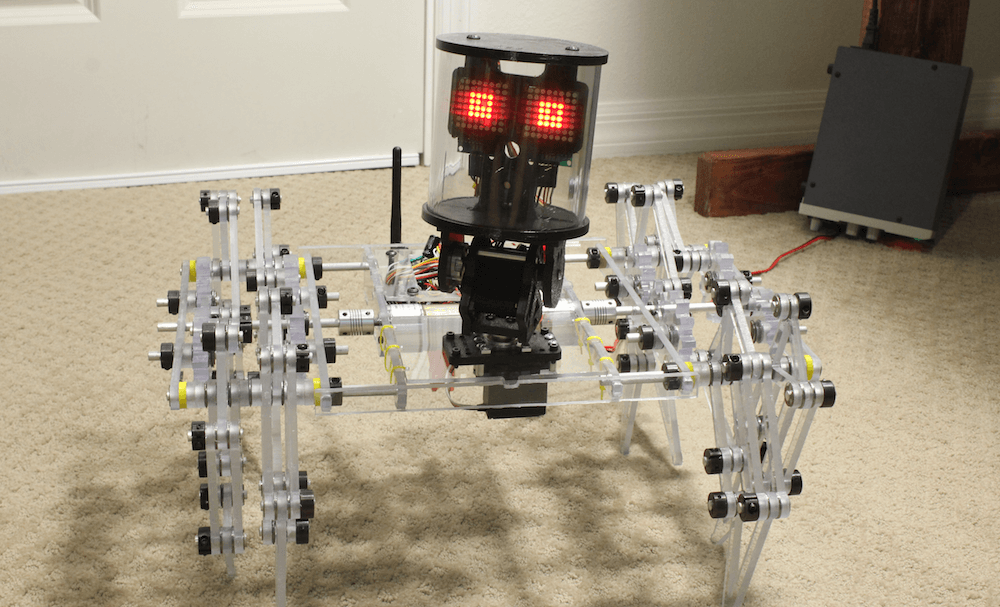

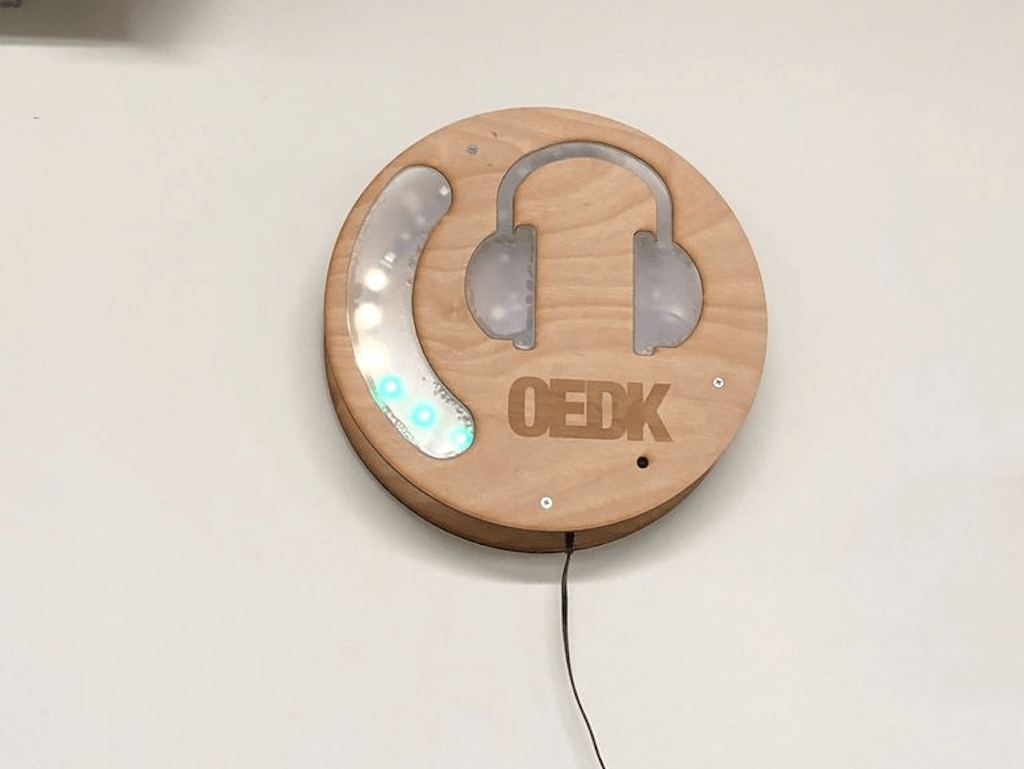

This display reminds makerspace members to wear hearing protection

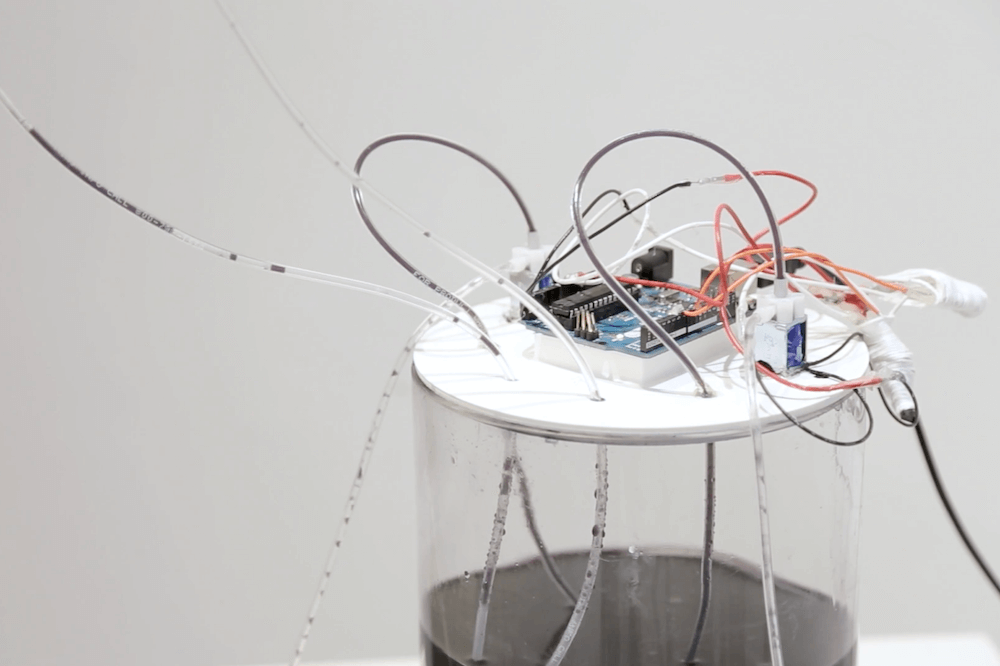

While members of the Oshman Engineering Design Kitchen makerspace at Rice University generally do a good job of wearing proper eye protection and gloves, hearing safety has lagged behind. To make it obvious when students need to put on hearing protection, the “Ring the Decibels” team there has come up with an excellent sound display, laser-cut from wood and acrylic.

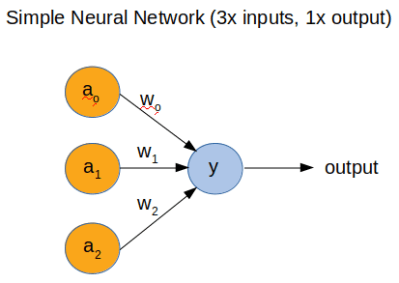

Their system uses an analog sound sensor to detect noise, passing the data on to an Arduino Uno. In response, the Uno controls two LED strips: one acts as a VU meter showing the current sound level, while the second flashes red under an acrylic headphones cutout when dangerous levels are present.
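The team's own code isn't reproduced here, but the logic described above can be sketched roughly as follows. This is a hardware-independent sketch under stated assumptions: it measures loudness as the peak-to-peak swing of a window of raw ADC samples, scales that level to a number of lit VU-meter LEDs, and compares it against a danger threshold. The function names `peakToPeak`, `ledsToLight`, and `isDangerous`, and the `kDangerThreshold` value, are hypothetical; the real threshold would have to be calibrated against a sound level meter for the specific sensor used.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Arduino Uno analogRead() returns 0..1023 (10-bit ADC).
constexpr int kAdcMax = 1023;
// Hypothetical threshold: the post gives no value, and the mapping from
// ADC counts to decibels depends on the sensor and must be calibrated.
constexpr int kDangerThreshold = 600;

// Peak-to-peak amplitude of one sampling window of raw ADC readings.
// An empty window is treated as silence.
int peakToPeak(const std::vector<int>& samples) {
    if (samples.empty()) return 0;
    auto [lo, hi] = std::minmax_element(samples.begin(), samples.end());
    return *hi - *lo;
}

// Number of VU-meter LEDs to light for a level in 0..kAdcMax.
// Out-of-range levels are clamped; 0 -> no LEDs, kAdcMax -> all LEDs.
int ledsToLight(int level, int numLeds) {
    assert(numLeds > 0);
    level = std::clamp(level, 0, kAdcMax);
    return level * numLeds / kAdcMax;  // integer scaling, rounds down
}

// True when the warning strip under the headphones cutout should flash.
bool isDangerous(int level) {
    return level >= kDangerThreshold;
}
```

On the Uno itself, the samples would come from repeated `analogRead()` calls over a short window, and the resulting LED count would be handed to whatever library drives the strips in this build. Using peak-to-peak amplitude rather than a single reading is an assumption here; it suits sensors whose output is the raw audio waveform centered around mid-scale.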

Build details are available here, and you can check out the demo below to see how it works!