The Sensor Array That Grew Into a Robot Cat

Human brains evolved to pay extra attention to anything that resembles a face (scientific term: “facial pareidolia”). [Rongzhong Li] built a robot sensor array with multiple emitters and receivers augmenting a Raspberry Pi camera in the center. When he looked at his sensor array, he saw the face of a cat looking back at him. This started his years-long Petoi OpenCat project to build a feline-inspired body to go with the face.

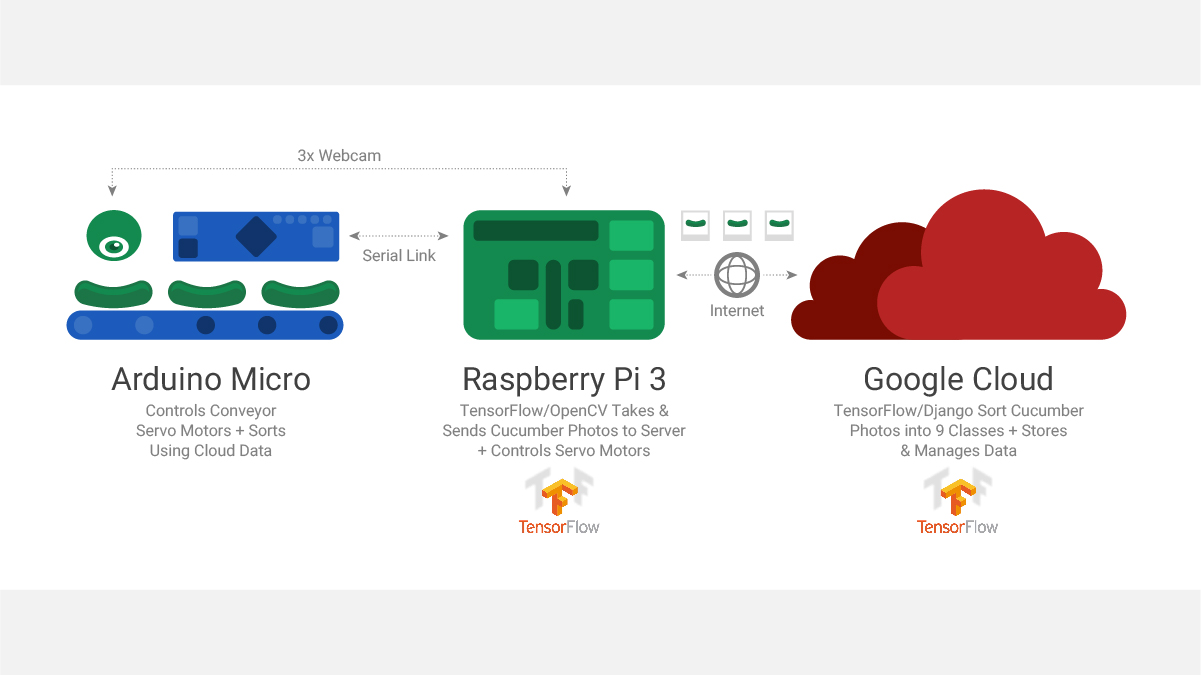

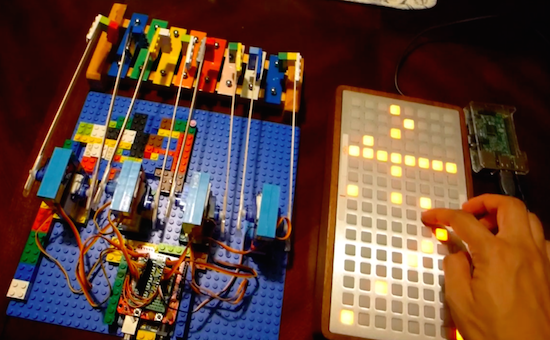

While the name of the project signals [Rongzhong]’s eventual intention, he has yet to release project details to the open-source community. But by reading his project page and scrutinizing his YouTube videos (a recent one is embedded below), we can decipher some details. Motion comes via hobby remote-control servos orchestrated by an Arduino. Higher-level functions such as awareness of environment and Alexa integration are handled by a Raspberry Pi 3.

The secret (for now) sauce is the set of mechanical parts that tie them all together, from the impact-absorbing spring integrated into the upper leg to the way its wrists and ankles articulate. [Rongzhong] believes the current iteration is far too difficult to build, and he wants to simplify construction before release. And while we don’t have much information on the software, the sensor array that started it all implies some level of sensor fusion capability.

We’ve seen lots of robotic pets, and for some reason there have been far more robotic dogs than cats. Inspiration can come from Boston Dynamics, from Dr. Who, or from… Halloween? We think the lack of cat representation is a missed opportunity for robotic pets. After all, if a robot cat’s voice recognition module fails and a command is ignored… that’s not a bug, it’s a feature of being a cat.

[via TheNextWeb]