Neural Network (Part 3): The Layer

The Layer

The main purpose of a layer is to group and manage neurons that are functionally similar, and to provide a means of controlling the flow of information through the network (forwards and backwards). The forward pass converts input values into output values; the backward pass is only done during network training.

In my neural network, the Layer is an essential building block. Layers can work individually or as a team. Let us just focus on one layer for now.

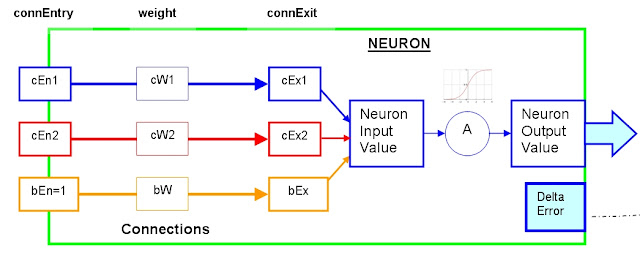

This is what a layer with two neurons looks like in my neural network:

As you can see from the picture above, I tend to treat each neuron as an individual.

Both neurons have the same connEntry values (cEn11 = cEn21 and cEn12 = cEn22), which stem from the layerINPUTs. Everything else (the connection weights and the bias) can differ between the two neurons. This means that Neuron1 could produce an output value close to 1 while Neuron2 produces an output close to 0, even though they start with the same connEntry values.

The following section will describe how the layerINPUTs get converted to actualOUTPUTs.

Step1: Populate the layerINPUTs:

layerINPUTs are just a placeholder for values that have been fed into the neural network, or that come from a previous layer within the same network. If this is the first layer in the network, the layerINPUTs are the neural network's input values (e.g. sensor data). If this is the second layer, the layerINPUTs equal the actualOUTPUTs of layer one.

Step2: Send each layerINPUT to the matching connection of every neuron in the layer.

You will notice that every neuron in the same layer has the same number of connections. This is because each neuron connects exactly once to each of the layerINPUTs. The relationship between the layerINPUTs and the connEntry values of each neuron is as follows.

layerINPUTs1:

cEn11 = layerINPUTs1 (Neuron1.Connection1.connEntry)

cEn21 = layerINPUTs1 (Neuron2.Connection1.connEntry)

layerINPUTs2:

cEn12 = layerINPUTs2 (Neuron1.Connection2.connEntry)

cEn22 = layerINPUTs2 (Neuron2.Connection2.connEntry)

Step3: Calculate the connExit values of each connection (including bias)

Neuron 1:

cEx11 = cEn11 x cW11

cEx12 = cEn12 x cW12

bEx1 = 1 x bW1

Neuron 2:

cEx21 = cEn21 x cW21

cEx22 = cEn22 x cW22

bEx2 = 1 x bW2
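
Steps 2 and 3 can be tried out with a few lines of plain Java (not a Processing sketch, so it runs on its own). This is only a minimal illustration: the class name `ConnExitSketch`, the method `connExits` and the sample numbers are made up here and are not part of the Layer class.

```java
// Hypothetical helper for illustration only; not part of the tutorial's Layer class.
class ConnExitSketch {

    // Returns the connExit values of one neuron: one per connection, with the bias exit last.
    static float[] connExits(float[] layerINPUTs, float[] weights, float biasWeight) {
        float[] exits = new float[layerINPUTs.length + 1];
        for (int j = 0; j < layerINPUTs.length; j++) {
            float connEntry = layerINPUTs[j];        // Step 2: connEntry is a copy of the layer input
            exits[j] = connEntry * weights[j];       // Step 3: cEx = cEn x cW
        }
        exits[layerINPUTs.length] = 1 * biasWeight;  // Step 3: bEx = 1 x bW
        return exits;
    }

    public static void main(String[] args) {
        float[] layerINPUTs = {0.5f, 2.0f};  // sample values (assumed)
        // Both neurons receive the same layerINPUTs but have their own weights and bias.
        float[] neuron1 = connExits(layerINPUTs, new float[]{2.0f, 0.25f}, 0.5f);
        float[] neuron2 = connExits(layerINPUTs, new float[]{-4.0f, 1.5f}, -1.0f);
        System.out.println(java.util.Arrays.toString(neuron1));
        System.out.println(java.util.Arrays.toString(neuron2));
    }
}
```

The same `layerINPUTs` array is passed to both calls, which mirrors cEn11 = cEn21 and cEn12 = cEn22; only the weights and bias differ.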

Step4: Calculate the NeuronInputValue for each neuron

Neuron1:

Neuron1.NeuronInputValue = cEx11 + cEx12 + bEx1

Neuron2:

Neuron2.NeuronInputValue = cEx21 + cEx22 + bEx2

Step5: Send the NeuronInputValues through an Activation function to produce a NeuronOutputValue

Neuron1:

Neuron1.NeuronOutputValue= 1/(1+EXP(-1 x Neuron1.NeuronInputValue))

Neuron2:

Neuron2.NeuronOutputValue= 1/(1+EXP(-1 x Neuron2.NeuronInputValue))

Please note that the NeuronInputValues for each neuron are different!
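
To see how two neurons with identical connEntry values can end up at opposite ends of the output range, here is a small plain-Java sketch of Steps 4 and 5. The class `ActivationSketch`, its method names and the weights are assumptions chosen for illustration; only the formulas come from the steps above.

```java
// Hypothetical illustration of Steps 4 and 5; names and numbers are assumed.
class ActivationSketch {

    // Step 4: NeuronInputValue = sum of the connExit values plus the bias exit.
    static float neuronInputValue(float[] connEntries, float[] weights, float biasWeight) {
        float sum = 1 * biasWeight;
        for (int j = 0; j < connEntries.length; j++) {
            sum += connEntries[j] * weights[j];
        }
        return sum;
    }

    // Step 5: sigmoid activation, 1/(1+EXP(-1 x NeuronInputValue)).
    static float sigmoid(float x) {
        return (float) (1.0 / (1.0 + Math.exp(-x)));
    }

    public static void main(String[] args) {
        float[] connEntries = {1.0f, 1.0f};  // both neurons see the same values

        // Neuron1: positive weights and bias give a NeuronInputValue of 6.
        float out1 = sigmoid(neuronInputValue(connEntries, new float[]{3.0f, 2.0f}, 1.0f));
        // Neuron2: negative weights and bias give a NeuronInputValue of -6.
        float out2 = sigmoid(neuronInputValue(connEntries, new float[]{-3.0f, -2.0f}, -1.0f));

        System.out.println("Neuron1 output: " + out1);  // close to 1
        System.out.println("Neuron2 output: " + out2);  // close to 0
    }
}
```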

Step6: Send the NeuronOutputValues to the layer's actualOUTPUTs

- actualOUTPUT1 = Neuron1.NeuronOutputValue

- actualOUTPUT2 = Neuron2.NeuronOutputValue

The NeuronOutputValues become the actualOUTPUTs of this layer, which then become the layerINPUTs of the next layer. This process is repeated layer by layer until you reach the final layer, where the actualOUTPUTs become the outputs of the entire neural network.
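
The hand-off from one layer to the next can be sketched as a simple loop. This is a stripped-down stand-in in plain Java, assuming weights are stored in arrays rather than in Neuron and Connection objects; `ForwardPassSketch`, `layerForward` and `networkForward` are hypothetical names, not functions from this tutorial.

```java
// Hypothetical array-based stand-in for chaining layers; not the tutorial's classes.
class ForwardPassSketch {

    static float sigmoid(float x) {
        return (float) (1.0 / (1.0 + Math.exp(-x)));
    }

    // One layer: weights[i][j] is the weight of connection j on neuron i,
    // biases[i] is the bias weight of neuron i.
    static float[] layerForward(float[] layerINPUTs, float[][] weights, float[] biases) {
        float[] actualOUTPUTs = new float[weights.length];
        for (int i = 0; i < weights.length; i++) {
            float neuronInputValue = biases[i];
            for (int j = 0; j < layerINPUTs.length; j++) {
                neuronInputValue += layerINPUTs[j] * weights[i][j];
            }
            actualOUTPUTs[i] = sigmoid(neuronInputValue);
        }
        return actualOUTPUTs;
    }

    // The actualOUTPUTs of each layer become the layerINPUTs of the next one.
    static float[] networkForward(float[] inputs, float[][][] allWeights, float[][] allBiases) {
        float[] values = inputs;
        for (int layer = 0; layer < allWeights.length; layer++) {
            values = layerForward(values, allWeights[layer], allBiases[layer]);
        }
        return values;
    }

    public static void main(String[] args) {
        float[][][] allWeights = {
            {{1.0f, -1.0f}, {0.5f, 0.5f}},  // layer one: 2 neurons, 2 connections each
            {{2.0f, -2.0f}}                 // layer two: 1 neuron, 2 connections
        };
        float[][] allBiases = {{0.0f, 0.0f}, {0.0f}};
        float[] outputs = networkForward(new float[]{1.0f, 1.0f}, allWeights, allBiases);
        System.out.println("Network output: " + outputs[0]);
    }
}
```

Note that the number of connections per neuron in layer two (2) has to match the number of neurons in layer one (2), otherwise the hand-off would not line up.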

Here is the code for the Layer class:

    class Layer {
      Neuron[] neurons = {};
      float[] layerINPUTs = {};
      float[] actualOUTPUTs = {};
      float[] expectedOUTPUTs = {};
      float layerError;
      float learningRate;

      /* This is the default constructor for the Layer */
      Layer(int numberConnections, int numberNeurons) {
        /* Add all the neurons and actualOUTPUTs to the layer */
        for (int i = 0; i < numberNeurons; i++) {
          Neuron tempNeuron = new Neuron(numberConnections);
          addNeuron(tempNeuron);
          addActualOUTPUT();
        }
      }

      /* Function to add an input or output Neuron to this Layer */
      void addNeuron(Neuron xNeuron) {
        neurons = (Neuron[]) append(neurons, xNeuron);
      }

      /* Function to get the number of neurons in this layer */
      int getNeuronCount() {
        return neurons.length;
      }

      /* Function to increment the size of the actualOUTPUTs array by one. */
      void addActualOUTPUT() {
        actualOUTPUTs = (float[]) expand(actualOUTPUTs, (actualOUTPUTs.length + 1));
      }

      /* Function to set the ENTIRE expectedOUTPUTs array in one go. */
      void setExpectedOUTPUTs(float[] tempExpectedOUTPUTs) {
        expectedOUTPUTs = tempExpectedOUTPUTs;
      }

      /* Function to clear ALL values from the expectedOUTPUTs array */
      void clearExpectedOUTPUT() {
        expectedOUTPUTs = (float[]) expand(expectedOUTPUTs, 0);
      }

      /* Function to set the learning rate of the layer */
      void setLearningRate(float tempLearningRate) {
        learningRate = tempLearningRate;
      }

      /* Function to set the inputs of this layer */
      void setInputs(float[] tempInputs) {
        layerINPUTs = tempInputs;
      }

      /* Function to convert ALL the Neuron input values into Neuron output values in this layer,
         through a special activation function. */
      void processInputsToOutputs() {
        /* neuronCount is used a couple of times in this function. */
        int neuronCount = getNeuronCount();

        /* Check to make sure that there are neurons in this layer to process the inputs */
        if (neuronCount > 0) {
          /* Check to make sure that the number of inputs matches the number of Neuron Connections. */
          if (layerINPUTs.length != neurons[0].getConnectionCount()) {
            println("Error in Layer: processInputsToOutputs: The number of inputs do NOT match the number of Neuron connections in this layer");
            exit();
          } else {
            /* The number of inputs are fine : continue
               Calculate the actualOUTPUT of each neuron in this layer,
               based on their layerINPUTs (which were previously calculated).
               Add the value to the layer's actualOUTPUTs array. */
            for (int i = 0; i < neuronCount; i++) {
              actualOUTPUTs[i] = neurons[i].getNeuronOutput(layerINPUTs);
            }
          }
        } else {
          println("Error in Layer: processInputsToOutputs: There are no Neurons in this layer");
          exit();
        }
      }

      /* Function to get the error of this layer */
      float getLayerError() {
        return layerError;
      }

      /* Function to set the error of this layer */
      void setLayerError(float tempLayerError) {
        layerError = tempLayerError;
      }

      /* Function to increase the layerError by a certain amount */
      void increaseLayerErrorBy(float tempLayerError) {
        layerError += tempLayerError;
      }

      /* Function to calculate and set the deltaError of each neuron in the layer */
      void setDeltaError(float[] expectedOutputData) {
        setExpectedOUTPUTs(expectedOutputData);
        int neuronCount = getNeuronCount();

        /* Reset the layer error to 0 before cycling through each neuron */
        setLayerError(0);
        for (int i = 0; i < neuronCount; i++) {
          neurons[i].deltaError = actualOUTPUTs[i] * (1 - actualOUTPUTs[i]) * (expectedOUTPUTs[i] - actualOUTPUTs[i]);

          /* Increase the layer Error by the absolute difference between the calculated value (actualOUTPUT) and the expected value (expectedOUTPUT). */
          increaseLayerErrorBy(abs(expectedOUTPUTs[i] - actualOUTPUTs[i]));
        }
      }

      /* Function to train the layer : which uses a training set to adjust the connection weights and biases of the neurons in this layer */
      void trainLayer(float tempLearningRate) {
        setLearningRate(tempLearningRate);
        int neuronCount = getNeuronCount();

        for (int i = 0; i < neuronCount; i++) {
          /* update the bias for neuron[i] */
          neurons[i].bias += (learningRate * 1 * neurons[i].deltaError);

          /* update the weight of each connection for this neuron[i] */
          for (int j = 0; j < neurons[i].getConnectionCount(); j++) {
            neurons[i].connections[j].weight += (learningRate * neurons[i].connections[j].connEntry * neurons[i].deltaError);
          }
        }
      }
    }
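
The arithmetic inside setDeltaError() and trainLayer() can be tried on single numbers before meeting it again in the back-propagation section. The plain-Java sketch below copies only the two formulas from the Layer class; the class `DeltaRuleSketch`, its method names and the sample values are assumptions made for illustration.

```java
// Hypothetical single-number version of the formulas in setDeltaError() and trainLayer().
class DeltaRuleSketch {

    // Same expression as in setDeltaError(): out * (1 - out) * (expected - out).
    static float deltaError(float actualOUTPUT, float expectedOUTPUT) {
        return actualOUTPUT * (1 - actualOUTPUT) * (expectedOUTPUT - actualOUTPUT);
    }

    // Same update as in trainLayer(): weight += learningRate * connEntry * deltaError.
    // For the bias, connEntry is simply 1.
    static float updatedWeight(float weight, float learningRate, float connEntry, float deltaError) {
        return weight + learningRate * connEntry * deltaError;
    }

    public static void main(String[] args) {
        float actual = 0.5f;    // sample neuron output (assumed)
        float expected = 1.0f;  // sample target (assumed)
        float delta = deltaError(actual, expected);

        float newWeight = updatedWeight(0.25f, 0.5f, 2.0f, delta);  // connection with connEntry 2.0
        float newBias = updatedWeight(0.0f, 0.5f, 1.0f, delta);     // bias uses connEntry 1

        System.out.println("deltaError: " + delta);
        System.out.println("new weight: " + newWeight);
        System.out.println("new bias:   " + newBias);
    }
}
```

Because the expected value is larger than the actual output here, the deltaError is positive, so both the weight and the bias are nudged upwards.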

Within each layer, you have neuron(s) and their associated connection(s). Therefore it makes sense to have a constructor that automatically sets these up.

Creating a new Layer(2,3) would automatically:

- add 3 neurons to the layer

- create 2 connections for each neuron in this layer (to connect to the previous layer neurons).

- randomise each neuron bias and connection weights.

- add 3 actualOUTPUT slots to hold the neuron output values.
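
To make the shape of a Layer(2,3) concrete, here is a plain-Java sketch that builds the same structure out of arrays. It is not the real constructor: `LayerShapeSketch` is a made-up name, and the random range of -1 to 1 is an assumption (the Neuron class may well initialise its weights differently).

```java
// Hypothetical array-based picture of what a Layer(2,3) holds; not the tutorial's constructor.
class LayerShapeSketch {
    final float[][] weights;      // weights[i][j]: connection j of neuron i
    final float[] biases;         // one bias per neuron
    final float[] actualOUTPUTs;  // one output slot per neuron

    LayerShapeSketch(int numberConnections, int numberNeurons, java.util.Random rng) {
        weights = new float[numberNeurons][numberConnections];
        biases = new float[numberNeurons];
        actualOUTPUTs = new float[numberNeurons];
        for (int i = 0; i < numberNeurons; i++) {
            biases[i] = rng.nextFloat() * 2 - 1;          // assumed range: -1 (inclusive) to 1 (exclusive)
            for (int j = 0; j < numberConnections; j++) {
                weights[i][j] = rng.nextFloat() * 2 - 1;  // same assumed range
            }
        }
    }

    public static void main(String[] args) {
        LayerShapeSketch layer = new LayerShapeSketch(2, 3, new java.util.Random());
        System.out.println(layer.weights.length + " neurons, "
            + layer.weights[0].length + " connections each, "
            + layer.actualOUTPUTs.length + " output slots");
    }
}
```

The argument order matches the Layer constructor: connections first, neurons second.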

Here are the functions of the Layer class:

- addNeuron() : adds a neuron to the layer

- getNeuronCount() : returns the number of neurons in this layer

- addActualOUTPUT() : adds an actualOUTPUT slot to the layer.

- setExpectedOUTPUTs() : sets the entire expectedOUTPUTs array in one go.

- clearExpectedOUTPUT() : clears all values within the expectedOUTPUTs array.

- setLearningRate() : sets the learning rate of the layer.

- setInputs() : sets the inputs of the layer.

- processInputsToOutputs() : converts all layer input values into output values

- getLayerError() : returns the error of this layer

- setLayerError() : sets the error of this layer

- increaseLayerErrorBy() : increases the layer error by a specified amount.

- setDeltaError() : calculates and sets the deltaError for each neuron in this layer

- trainLayer() : uses the learning rate and each neuron's deltaError to adjust the connection weights and biases of the neurons in this layer

A few of the functions listed above are used for neural network training (back-propagation) and have not been discussed yet. Don't worry, we'll go through them in the "back-propagation" section of the tutorial.

Next up: Neural Network (Part 4) : The Neural Network class
